2023-01-08: A Summary of "DocFormer: End to End Transformer for Document Understanding" (Appalaraju et al. 2021 ICCV)

Our previous blog post described the importance of document understanding for layout analysis. While layout analysis is important, many downstream tasks, including document classification, entity extraction, and sequence labeling, often require visual document understanding (VDU). VDU requires an understanding of both the structure and the layout of a document. Some VDU approaches rely on textual features alone and others combine textual and spatial features, but the best results are obtained by fusing textual, spatial, and visual features. Appalaraju et al. presented "DocFormer: End-to-End Transformer for Document Understanding" at the IEEE/CVF International Conference on Computer Vision (ICCV) in 2021. DocFormer incorporates a novel multi-modal self-attention with shared spatial embeddings in an encoder-only transformer architecture, and it achieved state-of-the-art results on four datasets spanning several downstream VDU tasks. The contributions of this paper are:

- DocFormer has a multi-modal attention layer and incorporates text, vision, and spatial features.

- The authors proposed three pre-training tasks, of which two were novel unsupervised multi-modal tasks: learning-to-reconstruct and multi-modal masked language modeling tasks.

- DocFormer is an end-to-end trainable model that does not rely on a pre-trained object detection network for visual features.

In this blog post, we summarize the model, the datasets used for the various tasks, and the evaluation results of DocFormer.

Model

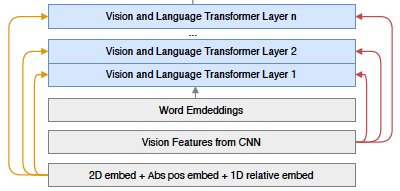

DocFormer is an encoder-only transformer architecture. It uses ResNet50 for visual feature extraction, and all components are trained end-to-end, so it does not rely on a pre-trained object detection network for visual features. Existing models such as LayoutLMv2 concatenate visual and text features into one long sequence and send it to the attention layer. In DocFormer, by contrast, the visual and text features go through the self-attention layer separately while sharing spatial features. DocFormer requires three input features: visual, language, and spatial.

Visual Features

The visual features are prepared as follows.

- The document image is fed through a ResNet50 convolutional neural network, which downscales it at each stage. The final feature map has shape (W, H, C), where W = width, H = height, and C = number of channels.

- A 1x1 convolution then changes the channel size to the desired dimension D: (W, H, C) -> (W, H, D).

- The feature map is flattened and transposed: (W, H, D) -> (WxH, D) -> (D, WxH).

- A linear transformation changes the last dimension to N: (D, WxH) -> (D, N).

- Lastly, the result is transposed, (D, N) -> (N, D), giving N visual feature vectors of dimension D.
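The shape changes in the steps above can be traced with a small sketch. This is only an illustration under assumptions: the CNN output is replaced by random numbers, the 1x1 convolution is modeled as a bias-free C x D matrix applied at every position, and the names `visual_features`, `conv_weight`, and `linear_weight` are hypothetical rather than taken from the paper.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    """Swap the two axes of a nested-list matrix."""
    return [list(col) for col in zip(*m)]

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

def visual_features(feature_map, conv_weight, linear_weight):
    """Turn a (W, H, C) feature map into an (N, D) feature sequence.

    feature_map:   W x H x C nested list standing in for the CNN output.
    conv_weight:   C x D matrix standing in for the 1x1 convolution (no bias).
    linear_weight: (W*H) x N matrix for the final linear transformation.
    """
    # Applying the C x D map to every flattened position is equivalent to
    # a 1x1 convolution followed by flattening.
    flat = [pixel for column in feature_map for pixel in column]  # (W*H, C)
    projected = matmul(flat, conv_weight)                         # (W*H, D)
    channels_first = transpose(projected)                         # (D, W*H)
    resized = matmul(channels_first, linear_weight)               # (D, N)
    return transpose(resized)                                     # (N, D)

rng = random.Random(0)
W, H, C, D, N = 4, 3, 8, 6, 5  # toy sizes chosen for illustration only
feature_map = [[[rng.uniform(-1.0, 1.0) for _ in range(C)] for _ in range(H)]
               for _ in range(W)]
features = visual_features(
    feature_map, random_matrix(C, D, rng), random_matrix(W * H, N, rng)
)
print(len(features), len(features[0]))  # prints: 5 6
```

The final transpose is what lets the visual features line up with a sequence of N positions, each described by a D-dimensional vector.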

Language Features

Text is extracted from the document image using OCR. To generate language embeddings, the authors tokenized the text using a word-piece tokenizer and fed it through a trainable embedding layer. Sequences longer than 511 tokens are truncated. Sequences shorter than 511 tokens are padded with a special [PAD] token, which is ignored during self-attention computation.
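A minimal sketch of the truncation and padding step, assuming the tokens are already available as a list of strings. The function name `prepare_tokens` and the 0/1 attention mask are illustrative assumptions; the summary only states that [PAD] tokens are ignored during self-attention.

```python
PAD = "[PAD]"
MAX_TOKENS = 511  # sequence limit stated in the text above

def prepare_tokens(tokens, max_tokens=MAX_TOKENS):
    """Truncate or pad a token list to exactly max_tokens entries.

    Returns the fixed-length token list and a mask in which 1 marks a
    real token and 0 marks padding that attention should ignore.
    """
    kept = tokens[:max_tokens]
    n_pad = max_tokens - len(kept)
    padded = kept + [PAD] * n_pad
    mask = [1] * len(kept) + [0] * n_pad
    return padded, mask

short_tokens, short_mask = prepare_tokens(["invoice", "total", "##s"], max_tokens=5)
print(short_tokens)  # ['invoice', 'total', '##s', '[PAD]', '[PAD]']
print(short_mask)    # [1, 1, 1, 0, 0]
```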

Spatial Features

For each token in the text, bounding box (bbox) coordinates are generated, e.g., bbox = (x1, y1, x2, y2, x3, y3, x4, y4). These 2D spatial coordinates give the token's location within the document it belongs to. The authors encoded the top-left and bottom-right coordinates using separate layers for the x and y values. They also encoded the width, the height, the Euclidean distance from each corner of a bbox to the corresponding corner of the bbox to its right, and the distance between the centroids of the two bboxes. Furthermore, because transformer layers are permutation-invariant, the authors added absolute 1D positional encodings. Separate spatial encodings are created for the visual and text features, based on the idea that spatial dependency could differ between the two modalities.
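The geometric quantities listed above can be computed as in the following sketch. It covers only the raw values, not the learned layers that embed them. The corner ordering (top-left, top-right, bottom-right, bottom-left), the image-style coordinate system with y growing downward, and the function name `spatial_features` are assumptions made for illustration.

```python
import math

def centroid(box):
    """Mean of the four corner points."""
    xs = [x for x, _ in box]
    ys = [y for _, y in box]
    return sum(xs) / len(xs), sum(ys) / len(ys)

def distance(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

def spatial_features(box, right_box):
    """Raw spatial quantities for a token box and the box to its right.

    Each box is a list of four (x, y) corners in the assumed order
    top-left, top-right, bottom-right, bottom-left.
    """
    xs = [x for x, _ in box]
    ys = [y for _, y in box]
    return {
        "top_left": (min(xs), min(ys)),
        "bottom_right": (max(xs), max(ys)),
        "width": max(xs) - min(xs),
        "height": max(ys) - min(ys),
        # Distance from each corner to the matching corner of the right box.
        "corner_distances": [distance(p, q) for p, q in zip(box, right_box)],
        "centroid_distance": distance(centroid(box), centroid(right_box)),
    }

box = [(0, 0), (4, 0), (4, 2), (0, 2)]
right_box = [(6, 0), (9, 0), (9, 2), (6, 2)]
geometry = spatial_features(box, right_box)
print(geometry["width"], geometry["height"])  # 4 2
print(geometry["corner_distances"])           # [6.0, 5.0, 5.0, 6.0]
print(geometry["centroid_distance"])          # 5.5
```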

Multi-Modal Self-Attention Layer

Figure 2: Multi-Modal Self-Attention Layer (source: Figure 7 from [Appalaraju et al. 2021 ICCV])

Figure 2 illustrates how the newly proposed self-attention differs from the vanilla transformer self-attention (Figure 2a). The proposed multi-modal method performs self-attention for the text and visual features separately and merges the results at the final stage.
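The separate-then-merge idea can be sketched in a few lines. This is a deliberately simplified illustration, not the paper's formulation, which contains additional terms that this summary does not cover. Two assumptions are made here: the shared spatial embeddings are simply added to the inputs of the query and key projections, and the two branches are merged by an element-wise sum. All function names are hypothetical.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices stored as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    """Element-wise sum of two equally shaped matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def softmax(row):
    peak = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(features, spatial, w_q, w_k, w_v):
    """Scaled dot-product self-attention over a single modality.

    Assumption: spatial embeddings are added before the query and key
    projections, so both modalities see the same spatial signal.
    """
    located = add(features, spatial)
    q, k, v = matmul(located, w_q), matmul(located, w_k), matmul(features, w_v)
    scale = math.sqrt(len(q[0]))
    keys_t = [list(col) for col in zip(*k)]
    scores = [[s / scale for s in row] for row in matmul(q, keys_t)]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v)

def multimodal_attention(text, visual, spatial, text_weights, visual_weights):
    """Attend over text and visual features separately, then merge."""
    text_out = self_attention(text, spatial, *text_weights)
    visual_out = self_attention(visual, spatial, *visual_weights)
    return add(text_out, visual_out)  # assumed merge: element-wise sum

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
N, D = 4, 3  # toy sequence length and feature dimension
text = random_matrix(N, D, rng)
visual = random_matrix(N, D, rng)
spatial = random_matrix(N, D, rng)  # shared by both branches
text_weights = [random_matrix(D, D, rng) for _ in range(3)]
visual_weights = [random_matrix(D, D, rng) for _ in range(3)]
fused = multimodal_attention(text, visual, spatial, text_weights, visual_weights)
print(len(fused), len(fused[0]))  # prints: 4 3
```

Because each branch keeps its own sequence of length N, the attention matrices stay N x N instead of growing with a concatenated text-plus-visual sequence.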

Pretraining

Figure 3: DocFormer Pre-training methodology (source: Figure 3 from [Appalaraju et al. 2021 ICCV])

Figure 3 illustrates the pre-training methodology of DocFormer. OCR is applied to the document to obtain the text and spatial features, and the visual features are extracted from the document image. All of these features are fed to the transformer layers. As noted above, the authors proposed three pre-training tasks:

- Multi-Modal Masked Language Modeling: For a sequence of text, corrupt the text by masking tokens and make the model reconstruct it. Unlike other works, this task does not mask the image regions that correspond to the [MASK] tokens. Instead, it uses visual features to supplement the text features.

- Learn to Reconstruct: The aim is to reconstruct the document image. The final output feature of the [CLS] token goes through a shallow decoder to reconstruct the document image. The final multi-modal outputs are gathered and used to reconstruct the original image at its original size. Since the input visual features were created through the shape-changing process CNN -> (WxH, d) -> (d, WxH) -> (d, N), the reverse can be applied to obtain a reconstructed image from the final multi-modal global output.

- Text Describe Image: A binary classifier is added on top of the [CLS] token's final output representation to predict whether the input text matches the input document image. Unlike the previous two tasks, which focus on local features, this task focuses on global features.
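The text corruption used in the first task can be sketched as follows. The 15% masking rate is an assumption borrowed from common masked language modeling practice, not a figure stated in this summary, and `mask_tokens` is a hypothetical helper. Only the text is corrupted; the image is left untouched, as described above.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob, rng):
    """Replace a random subset of tokens with [MASK].

    Returns the corrupted sequence and per-position labels: the original
    token where a mask was applied, and None elsewhere (no loss there).
    """
    corrupted, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            corrupted.append(MASK)
            labels.append(token)
        else:
            corrupted.append(token)
            labels.append(None)
    return corrupted, labels

rng = random.Random(0)
tokens = ["total", "amount", "due", ":", "42", ".", "00"]
corrupted, labels = mask_tokens(tokens, mask_prob=0.15, rng=rng)  # assumed rate
for original, seen, label in zip(tokens, corrupted, labels):
    print(original, seen, label)
```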

Dataset and Performance

The authors evaluated DocFormer on sequence labeling, document classification, and entity extraction tasks, using the FUNSD, RVL-CDIP, CORD, and Kleister-NDA datasets.

Sequence Labeling Task

The FUNSD dataset is a form understanding benchmark. It contains 199 noisy scanned and annotated documents (149 train, 50 test). The authors focused on the semantic entity-labeling task. DocFormer-base achieved an F1 score of 0.8334, which is better than comparable models such as LayoutLMv2-base (+0.58 points), BROS (+2.13 points), and LayoutLMv1 (+4.07 points). DocFormer-large achieved similar performance despite being pre-trained with 5M documents.

Document Classification Task

For this task, the RVL-CDIP dataset was used. This dataset consists of 400,000 grayscale images in 16 classes, with 25,000 images per class. Overall, there are 320,000 training images, 40,000 validation images, and 40,000 test images. The authors reported classification accuracy. DocFormer-base achieved a state-of-the-art accuracy of 96.17%, whereas DocFormer-large achieved 95.50%.

Entity Extraction Task

The authors used the CORD and Kleister-NDA datasets for the entity extraction task. CORD consists of receipts and defines 30 fields under four categories. The task was to assign each word to the correct field. The authors reported the F1 score as the evaluation metric. DocFormer-base achieved 0.9633 F1 on this dataset, whereas DocFormer-large achieved 0.9699. The Kleister-NDA dataset consists of legal NDA documents. The task with Kleister-NDA was to extract the values of four fixed labels. DocFormer-base achieved 0.858 F1 on this dataset.
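For readers unfamiliar with the metric, the F1 score reported for these tasks is the harmonic mean of precision and recall. The sketch below computes it from raw counts; the counts are made up for illustration, and whether matches are counted per token or per entity is not specified in this summary.

```python
def f1_score(true_positives, false_positives, false_negatives):
    """F1 = 2*TP / (2*TP + FP + FN), the harmonic mean of precision and recall."""
    denominator = 2 * true_positives + false_positives + false_negatives
    if denominator == 0:
        return 0.0  # nothing predicted and nothing to find
    return 2 * true_positives / denominator

# Hypothetical counts: 8 correct labels, 2 spurious, 2 missed.
score = f1_score(true_positives=8, false_positives=2, false_negatives=2)
print(score)  # prints: 0.8
```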

Conclusion

The authors proposed a multi-modal end-to-end trainable transformer-based model for VDU tasks. They described the novelty of the self-attention model and two novel pre-training tasks. Overall, DocFormer achieved state-of-the-art performance for different document understanding tasks.

Reference

S. Appalaraju, B. Jasani, B. U. Kota, Y. Xie and R. Manmatha, "DocFormer: End-to-End Transformer for Document Understanding," 2021 IEEE/CVF International Conference on Computer Vision (ICCV), 2021, pp. 973-983, doi: 10.1109/ICCV48922.2021.00103.

-- Muntabir Choudhury (@TasinChoudhury)
