2022-09-06: Anomaly Detection for Manufactured Computer Components' Failures: Summer Internship Experience at Microsoft Corporation

This summer, I was accepted as a graduate student intern at the Quality Management System (QMS) team, a subdivision of the Cloud Hardware and Infrastructure Engineering (CHIE) organization at Microsoft Corporation in Redmond, Washington, USA. Microsoft is an international organization whose mission is to empower every person and every organization on the planet to achieve more. This year, Microsoft continued its student internship program for Summer 2022. Approximately 4000 students joined Microsoft in the USA to work on various projects during the summer. Due to the COVID-19 pandemic, most internships were a hybrid of onsite and remote work.

My internship was a 12-week program that started on May 23rd, 2022. During this internship, I worked mostly remotely as a data scientist intern under the supervision of Nikolaj Lunoee and Kapil Jain. However, I visited the Redmond campus during the week of July 25th through July 29th, 2022. Throughout this program, I attended daily meetings with the entire QMS team and monthly meetings with the CHIE team. The daily meetings with the QMS team were for reporting my progress and obtaining feedback to resolve issues or improve the solution. I usually had a one-on-one meeting with my mentor, Kapil Jain, every day to discuss my progress and any issues I faced. I also met with my manager, Nikolaj Lunoee, at least once a week.

Project

QMS Business Nature

The QMS team within the CHIE organization is responsible for systematically driving the quality of hardware components. One of the metrics used to monitor the quality of the different hardware components is the Part Replacement Rate (PRR). PRR is obtained from the hardware components that failed after installation in the data center. Examples of the components the QMS team monitors include memory modules and solid-state drives. These components are present in different servers located in different computer racks across multiple data centers. These components also consist of thousands of different sub-parts, which makes it infeasible to manually monitor the different parts within each component. With the current manual approach, a faulty part could go undetected for a long time, which in turn can negatively affect other properly functioning parts. Thus, the goal of my project was to design an anomaly detector that can automatically track the performance of these different parts and components.

I began my internship by familiarizing myself with the QMS domain and the PRR data, reading the available documentation and taking relevant courses to help me understand the problem I was to tackle. I also scheduled several one-on-one meetings with each member of the team to learn about each person's work and contributions. In addition, I familiarized myself with the Microsoft Azure Synapse Analytics and Azure Machine Learning platforms, since these are the platforms my team uses to carry out its operations.

I started by exploring the data to get a basic understanding of its features. Then, I conducted both univariate and bivariate analysis of the different features using Python packages such as Plotly. I also performed a lot of data preprocessing and data cleaning to prepare the data for modeling. One of the challenges I faced with the data was differentiating between a PRR anomaly and an accepted variation. I resolved this by using a modified z-score (a statistical approach) to find a threshold between anomalies and accepted variation. Then, I used this threshold to create a ground truth for the data in order to evaluate the performance of my models.

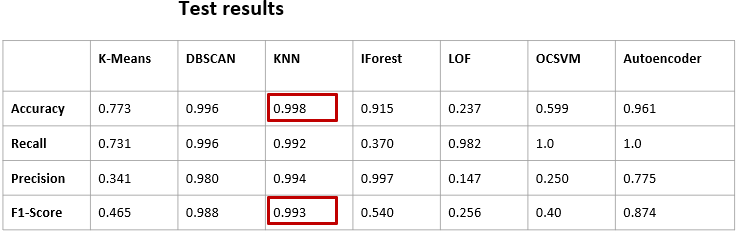

Then, I explored different anomaly detection methods such as unsupervised K-Nearest Neighbors, Isolation Forest, Density-Based Spatial Clustering of Applications with Noise (DBSCAN), and Autoencoders.

Summary of Explored Anomaly Detection Models

- K-Nearest Neighbors (KNN): KNN is usually presented as a supervised learning algorithm, but in the context of anomaly detection it is used in an unsupervised manner. It belongs to the nearest-neighbors family. The fundamental assumption of this family of models is that similar observations lie close to each other, while outliers are usually isolated observations that sit farther from the cluster of similar observations. I used the KNN model available in the Python Outlier Detection (PyOD) package. I tuned its parameters using Grid Search with cross-validation to improve the model's performance.

- Isolation Forest (IForest): It isolates observations by randomly selecting a feature and then randomly selecting a split value between the maximum and minimum values of that feature. I used a similar optimization approach to improve the IForest model.

- K-Means: It randomly selects initial cluster centroids based on the specified number of clusters, then assigns each observation to the closest centroid and recomputes the centroids. This process continues until the centroids no longer change. I used the silhouette score to select an optimal number of clusters for my K-Means model.

- Density-Based Spatial Clustering of Applications with Noise (DBSCAN): It finds core samples of high density and expands clusters from them. It works well for data that contains clusters of similar density. One of the most important parameters to optimize for DBSCAN is "eps" (the maximum distance between two samples for one to be considered as in the neighborhood of the other). So, I used a nearest-neighbor technique to find a suitable value of eps.

- Local Outlier Factor (LOF): It measures the local deviation of the density of a given observation with respect to its neighbors. The anomaly score of each observation is called its LOF. It is local in that the anomaly score depends on how isolated the observation is with respect to its surrounding neighborhood. I used LOF in the context of novelty detection and tuned it with Grid Search.

- One-Class Support Vector Machine (OCSVM): It is used to estimate the support of a high-dimensional distribution. I mainly optimized the kernel and tolerance hyper-parameters of the model.

- Autoencoder: It is a type of neural network that is trained to copy its input to its output. It first compresses its input into a lower-dimensional latent representation, and then expands it back to the original input dimension. The underlying assumption is that noise (outliers) gets removed during this compression, so anomalous observations are reconstructed poorly. I optimized the model's hyper-parameters using keras-tuner.
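The nearest-neighbor idea behind several of the models above can be sketched in a few lines of plain Python. This is only an illustration under my own assumptions, not the PyOD or scikit-learn implementation: the function name `kth_neighbor_distances` and the sample points are hypothetical, and the brute-force search would be far too slow for real PRR data. It scores each observation by the distance to its k-th nearest neighbor, so isolated observations get high scores. Sorting the same distances gives a k-distance curve, and looking for the "elbow" in that curve is one common way to pick eps for DBSCAN (I am assuming that heuristic here).

```python
import math


def kth_neighbor_distances(points, k):
    """Distance from each point to its k-th nearest neighbor.

    Brute-force O(n^2) search; each point is excluded from its own
    neighbor list. Larger values mean more isolated points.
    """
    if not 1 <= k < len(points):
        raise ValueError("k must be between 1 and len(points) - 1")
    scores = []
    for i, p in enumerate(points):
        dists = sorted(
            math.dist(p, q) for j, q in enumerate(points) if j != i
        )
        scores.append(dists[k - 1])
    return scores


# Hypothetical 2-D observations: a tight cluster plus one distant point.
points = [
    (1.0, 1.0), (1.1, 0.9), (0.9, 1.1),
    (1.0, 1.2), (1.2, 1.0), (5.0, 5.0),
]

scores = kth_neighbor_distances(points, k=2)

# Index of the most isolated observation (highest anomaly score).
most_anomalous = max(range(len(points)), key=scores.__getitem__)

# Sorted k-distances; a sharp jump near the end hints at a cutoff for eps.
k_distance_curve = sorted(scores)
```

Here the distant point at index 5 receives the largest score, and it is also the last value on the sorted curve, well separated from the distances inside the cluster.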
