2022-08-19: Disinformation Detection and Analytics REU Program - Final Summer Presentations

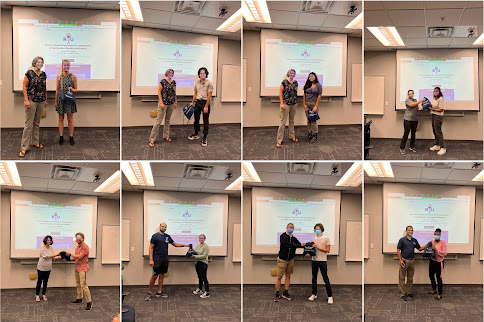

The Web Science & Digital Libraries Research Group (@WebSciDL) within the Old Dominion University Department of Computer Science (@oducs), a 2022 National Science Foundation (NSF) Research Experiences for Undergraduates (REU) site in Disinformation Detection and Analytics, invited several students to participate in research as part of a 10-week summer internship program. The eight students, who had previously briefed their mid-summer progress, gave final presentations of their research on Thursday, August 4, 2022. Their mentors, other faculty members, and students in the CS department joined the final presentation session in person and virtually to learn more about their research outcomes. Being present in the building came with a few perks, including pineapple pizza.

Our @oducsreu 2022 cohort is presenting their final project work today August 04, 1-4pm, Tune into #oducsreu for live tweeting of the presentations. We are enjoying the lunch, did I tell you that we have pineapple pizza ;) @WebSciDL @oducs @ODUSCI pic.twitter.com/nVY8Az35Gk

— Sampath Jayarathna (@OpenMaze) August 4, 2022

With no judgment on others’ choices for pizza toppings, the group prepared for an afternoon of learning that would focus on how to uncover mis- and disinformation in news and social media, their impacts on readers, and methods for gathering and analyzing related data.

Autumn Woodson (@AWoods_n) advised by Dr. Sampath Jayarathna (@OpenMaze)

Autumn kicked off the program with a presentation on "Human Interaction With Fake News." In her project, she studied participants' reading patterns using eye-tracking data collected while they read fake versus real news articles. The goals of the project were to characterize these reading patterns and to use machine learning methods to identify direct measurements for analyzing engagement. The study extended an eye-tracking study published at ETRA 2022 by adding pupillometric information.

The Final Presentation session of the @oducsreu program has kicked off with Autumn's presentation on "Human Interaction With Fake News". In her project, Autumn studied the reading patterns of participants while reading fake new articles. #oducsreu@WebSciDL @oducs @ODUSCI pic.twitter.com/uoWUxkaTOx

— Yasasi (@Yasasi_Abey) August 4, 2022

Isabelle Valdes (@isabellefv_) advised by Dr. Erika Frydenlund (@ErikaFrydenlund)

Isabelle shared her work on “Networks of Disinformation: The Proliferation of Hate Speech in Chile and Colombia During the Venezuelan Migration Crisis”, in which she analyzed how the spread of disinformation affects migrants. She focused on the impacts on Venezuelan refugees, such as the formation of stereotypes that they are responsible for crimes. In this project, she identified networks that spread such disinformation using graph clustering algorithms and interactions among social media users.

.@isabellefv_ is presenting her project “Networks of Disinformation: The Proliferation of Hate Speech in Chile and Colombia During the Venezuelan Migration Crisis” (mentor @ErikaFrydenlund) at @oducsreu final presentation session. #oducsreu@WebSciDL @oducs @ODUSCI pic.twitter.com/NwHS92suoM

— Yasasi (@Yasasi_Abey) August 4, 2022

Ethan Landers (@ethanlanders_) advised by Dr. Jian Wu (@fanchyna)

Ethan presented “An Assessment of Scientific Claim Verification Frameworks”, in which he worked on detecting misinformation in the scientific community. His goal was to automate scientific claim verification. For this, he used the state-of-the-art MultiVerS model with the SciFact dataset. The research questions he attempted to answer were: (1) how well does the model trained on SciFact detect open-domain scientific disinformation; and (2) how well does the model trained on other datasets detect open-domain scientific disinformation?

Now in @oducsreu final presentations, @ethanlanders_ is presenting his project titled, “An Assessment of Scientific Claim Verification Frameworks”. In this work, he has used the state-of-the-art MultiVerS model with SciFact dataset. (His mentor @fanchyna) #oducsreu @WebSciDL pic.twitter.com/9G6dWQuRRo

— Yasasi (@Yasasi_Abey) August 4, 2022

Dani Graber (@compsci_dani) advised by Dr. Anne Perrotti (@slpmichalek)

Dani presented her research on “Disinformation About Mental Health on Tiktok.” During the presentation, she discussed how disinformation about mental health conditions such as ADHD, DID, and autism has spread on TikTok. She further explained how this disinformation could lead to improper self-diagnosis and a lack of proper medical treatment among social media users. Dani also discussed why people want to post about their mental health issues on TikTok, highlighting potential fame, fans, and monetary benefits.

At the Final Presentation session of the #oducsreu, now presenting @compsci_dani (mentor @slpmichalek) her work on “Disinformation About Mental Health on Tiktok”. She studied how disinformation on mental health issues such as ADHD, DID has spread on Tiktok. @WebSciDL @oducs pic.twitter.com/jZAT28Qn0q

— Yasasi (@Yasasi_Abey) August 4, 2022

Michael Husk (@mhusk_3) advised by Dr. Faryaneh Poursardar (@Faryane)

Michael's presentation was entitled "Fake Review Detection", in which he identified fake reviews posted on review websites such as Amazon, Yelp, and TripAdvisor. During his work, he found that 93% of customers read reviews when purchasing a product online, and that most companies offer rewards for posting reviews regardless of whether a person actually purchased the item. This increases the tendency to post fake reviews. In the fake review detection task, Michael achieved an accuracy of about 80% with the Multinomial Naive Bayes model on the Amazon Review dataset. He also experimented with other machine learning models, such as Logistic Regression, Bernoulli Naive Bayes, Random Forests, SVM, and BERT, to detect fake reviews.
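To make the Multinomial Naive Bayes idea concrete, here is a minimal sketch written with only the Python standard library. It is not Michael's code: the class name, the whitespace tokenizer, the Laplace smoothing value, and the four toy reviews are all assumptions invented for illustration, and they are not drawn from the Amazon Review dataset.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    # Assumption: lowercase whitespace tokenization; a real pipeline would do more.
    return text.lower().split()


class ToyMultinomialNB:
    """Hypothetical, minimal Multinomial Naive Bayes text classifier."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha  # Laplace smoothing strength (assumed value)

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        self.vocab = set()
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        self.total_words = {
            c: sum(self.word_counts[c].values()) for c in self.class_counts
        }
        return self

    def log_scores(self, text):
        n_docs = sum(self.class_counts.values())
        vocab_size = len(self.vocab)
        scores = {}
        for c, n_c in self.class_counts.items():
            # Log prior plus the sum of smoothed log likelihoods per token.
            score = math.log(n_c / n_docs)
            denom = self.total_words[c] + self.alpha * vocab_size
            for tok in tokenize(text):
                if tok in self.vocab:  # ignore words never seen in training
                    count = self.word_counts[c][tok]
                    score += math.log((count + self.alpha) / denom)
            scores[c] = score
        return scores

    def predict(self, text):
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


# Invented toy reviews, for illustration only.
train_texts = [
    "best product ever amazing amazing buy now",
    "amazing deal best ever five stars",
    "battery lasted two weeks then died",
    "fits well but the zipper broke after a month",
]
train_labels = ["fake", "fake", "genuine", "genuine"]

model = ToyMultinomialNB().fit(train_texts, train_labels)
prediction = model.predict("amazing best product ever")
print(prediction)
```

The classifier picks the class with the highest sum of the log prior and the smoothed per-word log likelihoods. The accuracy reported in the talk depends on the real dataset and feature pipeline, which this sketch does not reproduce.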

Michael Husk @mhusk shares his work on “Fake Review Detection” with those attending the @oducsreu program 2022 final presentations. #oducsreu @WebSciDL @oducs @ODUSCI pic.twitter.com/iBVkhYnzKM

— Bathsheba Farrow (@sheissheba) August 4, 2022

Ash Dobrenen (@Kat9951) advised by Dr. Vikas Ashok (@vikas_daveb)

Ash presented the "Protect Blind Screen-Readers From Deceptive Content" project, in which they studied how to detect deceptive content on web pages and alert blind users to it, and developed an intelligent browser extension to identify such content. They used hand-crafted features combined with machine learning algorithms to detect deceptive content.

Next @oducsreu final presentations: @Kat9951 presenting their study (mentor: @vikas_daveb ) "Protect Blind Screen-Readers From Deceptive Content" by developing an intelligent browser extension to identify deceptive content. #oducsreu @WebSciDL @accessodu @NirdsLab @oducs @ODUSCI pic.twitter.com/M8IePBf6jK

— Bhanuka Mahanama (@mahanama94) August 4, 2022

Haley Bragg (@haleybragg17) advised by Dr. Michele C. Weigle (@weiglemc)

Haley presented her work on “How Well is Instagram Archived through the Years,” which is an extension of Himarsha Jayanetti’s (@HimarshaJ) work on how well Instagram pages are covered in public web archives. Haley worked on developing methods for gathering metadata from the CrowdTangle API and the Memento collection. She found that Instagram itself is not well archived: 96.13% of mementos from the Disinformation Dozen's accounts redirect to the login page, and only 27.16% of the replayable mementos for the Disinformation Dozen replay every post image. Overall, only 1.05% of mementos for the Disinformation Dozen accounts are replayable with complete post images.
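The three percentages above fit together arithmetically under one assumption that is ours rather than something stated in the talk: that a memento counts as "replayable" exactly when it does not redirect to the login page. A short sketch of that check, with hypothetical variable names:

```python
# Figures reported in the presentation.
redirect_share = 0.9613                # mementos that redirect to the login page
complete_share_of_replayable = 0.2716  # replayable mementos showing every post image

# Assumption (ours): replayable means "does not redirect to the login page".
replayable_share = 1 - redirect_share

# Share of all mementos that are replayable with complete post images.
overall_complete_share = replayable_share * complete_share_of_replayable

print(f"{replayable_share:.2%}")        # 3.87%
print(f"{overall_complete_share:.2%}")  # 1.05%
```

Under that assumption, 3.87% of mementos are replayable, and 27.16% of those gives the reported 1.05% overall.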

Haley Bragg @haleybragg17 briefs her work on “How Well is Instagram Archived through the Years” during the @oducsreu 2022 program final presentations #oducsreu @WebSciDL @oducs @ODUSCI pic.twitter.com/A51Cco0WB2

— Bathsheba Farrow (@sheissheba) August 4, 2022

Caleb Bradford (@calebkbradford) advised by Dr. Michael Nelson (@phonedude_mln)

Caleb presented his work on "Did They Really Tweet That?" In his project, Caleb tried to identify fabricated tweets through fact-checking and verification techniques. He established the probability that a public figure said, did not say, or said and then deleted a given tweet. He used Politwoops, Snopes.com, FactCheck.org, and Google to query the tweets and collect evidence for verification.

Final presentation @oducsreu presentations: @calebkbradford presenting "Did They Really Tweet That?" (mentor: @phonedude_mln). The project attempts to identify fabricated tweets through fact-checking and verification techniques. #oducsreu @WebSciDL @NirdsLab @oducs @ODUSCI @NSF pic.twitter.com/KejpypYQ2r

— Bhanuka Mahanama (@mahanama94) August 4, 2022

-- Bathsheba (@sheissheba) and Gavindya (@Gavindya2)
