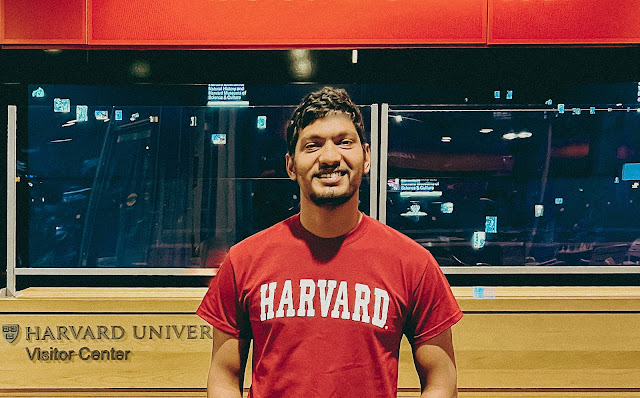

2022-06-21: My Visiting Fellowship at Harvard

In Spring 2022, I was honored with the opportunity to be a visiting fellow at the Center for Advanced Imaging at Harvard. This center is part of Harvard's Faculty of Arts & Sciences. It is located in the Northwest Building of Harvard, which is a multi-disciplinary research facility designed to enhance collaboration between neuroscience, bioengineering, computational analysis, and other disciplines. The center is spread across two floors of the Northwest Building, with an office suite on the ground floor and an imaging laboratory on the B4 floor, along with open spaces, optics rooms, environmentally-controlled rooms, and support spaces. Since its inception, the center has been home to several labs, including the Babcock Lab, the Seeber Lab, the Cohen Lab, and the Wadduwage Lab.

Northwest Building, Harvard University

At this center, I worked under Dr. Dushan Wadduwage of the Wadduwage Lab for Differentiable Microscopy (∂µ). His lab works on novel computational microscopy solutions for measuring biological systems in their most information-rich form, with minimal redundancy.

Workspaces at the Center for Advanced Imaging at Harvard

Every Monday, we had a group meeting where one member presented a novel and interesting deep learning technique with parallels to ongoing research at the lab. This was a good opportunity to stay up to date on cutting-edge machine learning research and to build an intuitive understanding of these techniques. I also participated in several collaboration meetings to understand the types of research conducted at the lab.

Things I Did

I was involved in developing interpretable deep learning methods to detect antimicrobial-resistant (AMR) bacteria through quantitative phase imaging (QPI). For this project, I used explainable AI techniques to visualize what the deep learning models had learned from the data and, by doing so, to justify their predictions. I also explored methods to quantify the uncertainty of computer vision models trained to predict only a small subset of the population, while the population of real-world samples expands beyond this subset (e.g., classifiers trained to predict one of N classes, with the actual number of classes >> N). By formulating this as an open-set recognition problem, we explored hybrid model architectures that implicitly learn the joint distribution of image samples and their respective class labels, and thereby estimate the model's predictive uncertainty.

Things I Learned

During this period, I learned the basics of diffraction-limited imaging and its challenges, as well as techniques such as confocal microscopy and super-resolution microscopy (e.g., STED, SSIM, STORM, and PALM) that were designed to overcome them. I also gained exposure to fascinating syntheses of deep learning and optics, which utilize physics-based optical models (with differentiable functional forms) within deep neural models for end-to-end learning.
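As a rough illustration of the diffraction limit mentioned above (this is not code from the lab), the Abbe formula d = λ / (2·NA) gives the smallest lateral separation a conventional light microscope can resolve. The function name and the example wavelength and numerical aperture below are my own assumptions, chosen only to show the scale involved.

```python
def abbe_limit_nm(wavelength_nm: float, numerical_aperture: float) -> float:
    """Abbe lateral resolution limit, d = wavelength / (2 * NA), in nanometres."""
    if wavelength_nm <= 0 or numerical_aperture <= 0:
        raise ValueError("wavelength and numerical aperture must be positive")
    return wavelength_nm / (2.0 * numerical_aperture)


if __name__ == "__main__":
    # Assumed example values: green light and a high-NA oil-immersion objective.
    d = abbe_limit_nm(wavelength_nm=500.0, numerical_aperture=1.4)
    print(f"Abbe limit: {d:.1f} nm")
```

Features closer together than this distance blur into one another in a diffraction-limited image, which is the gap that super-resolution techniques aim to close.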

In addition, I explored a relatively new class of deep learning models, namely Normalizing Flows and Invertible Neural Networks (INNs). I spent time learning the mathematical concepts behind some recent deep learning advancements (such as Geometric Measure Theory and Calculus of Variations) and brushing up on Probability and Statistics.
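To give a flavor of what makes these models invertible, here is a minimal NumPy sketch of a single affine coupling layer, the building block popularized by RealNVP-style flows. It is an illustration under my own assumptions, not the architecture used in the project: the function names, the linear "networks" `w_s` and `w_t`, and the standard normal base distribution are all hypothetical choices, and the input dimension is assumed to be even.

```python
import numpy as np


def coupling_forward(x, w_s, w_t):
    """Affine coupling: y1 = x1, y2 = x2 * exp(s(x1)) + t(x1)."""
    d = x.shape[1] // 2
    x1, x2 = x[:, :d], x[:, d:]
    s = np.tanh(x1 @ w_s)  # log-scale, bounded by tanh for numerical stability
    t = x1 @ w_t           # translation
    y2 = x2 * np.exp(s) + t
    # The Jacobian is triangular, so log|det J| is just the sum of the log-scales.
    log_det = s.sum(axis=1)
    return np.concatenate([x1, y2], axis=1), log_det


def coupling_inverse(y, w_s, w_t):
    """Exact inverse: s and t depend only on the half that passed through unchanged."""
    d = y.shape[1] // 2
    y1, y2 = y[:, :d], y[:, d:]
    s = np.tanh(y1 @ w_s)
    t = y1 @ w_t
    x2 = (y2 - t) * np.exp(-s)
    return np.concatenate([y1, x2], axis=1)


def log_likelihood(x, w_s, w_t):
    """Change of variables: log p(x) = log N(f(x); 0, I) + log|det J_f(x)|."""
    z, log_det = coupling_forward(x, w_s, w_t)
    log_pz = -0.5 * (z ** 2).sum(axis=1) - 0.5 * z.shape[1] * np.log(2 * np.pi)
    return log_pz + log_det


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(5, 4))
    w_s = rng.normal(size=(2, 2))
    w_t = rng.normal(size=(2, 2))
    y, _ = coupling_forward(x, w_s, w_t)
    print(np.allclose(coupling_inverse(y, w_s, w_t), x))
```

Because the layer can be inverted exactly and its log-determinant is cheap to compute, stacking such layers yields a model with a tractable likelihood, which is what makes flows attractive for density estimation.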

Final Thoughts

I’m eternally grateful to Dr. Dushan Wadduwage for giving me this opportunity, and for his valuable feedback and insightful discussions when exploring new ideas. I’m also thankful to Dr. Sampath Jayarathna for his consistent guidance and support in pursuing it. I hope to continue exploring this area of research on a collaborative basis in the future.

-- Yasith Jayawardana (@yasithdev)
