One of the research thrusts I'm currently pursuing is evaluating how much we can trust crowdsourced data provided by users in a large multi-user network with social components. As a first step, I've been surveying some popular user-data networks and current efforts in the literature to understand the general dynamics of such systems. As you'll see, from a very high level these dynamics depend on the interactions between users (or, in some cases, autonomous agents) and their behavior in the system. The ultimate goal is to treat the quality of the data the users/agents provide as their "behavior."

These networks need not be social networks in the strict sense; they can include social-centric services such as Waze (a navigation application that uses user-provided data on current traffic conditions and locations), Glassdoor (a job search and company information site that uses user-provided data on salaries and benefits), and SpotAngels (a community-based application for finding parking spots), to name a few.

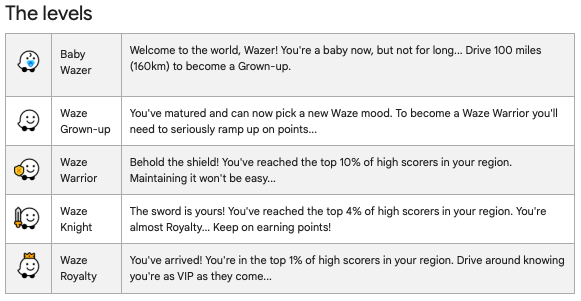

Waze is of particular interest due to how much it relies on user-provided data for a time-sensitive activity like driving. Two of the main ways users participate in the Waze community are through map editing and reporting traffic conditions or road hazards like a stopped vehicle or police presence. As users drive in an area they can send reports about traffic conditions to warn other drivers, and in turn the users who report the condition earn experience points (XP) which contribute toward the different user levels one can reach by using Waze.

Points are allocated according to different actions like reporting incidents and achievements:

Points per report and achievement (source: waze.com)

and also distance traveled:

Points per distance traveled/trajectory followed (source: waze.com)

Drilling down further, Waze assigns two scores to each incident reported by a user: confidence and reliability. These scores have related but opposing frames of reference. Confidence reflects how other users interact with the report: they either confirm that the incident is still active and the report accurate, or mark the incident as "not there," meaning the report is no longer accurate. Reliability, on the other hand, scores how much we should be able to trust the source of the report (the reporting user) based on the source's experience level. Each score ranges from 0 to 10, with higher values indicating more confidence or reliability.
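To make the two scores concrete, here is a minimal sketch of how a report might carry both. Only the 0 to 10 range and the meaning of each score come from the description above; the starting confidence, the step size, the linear level-to-reliability mapping, and the weighted blend are all my own assumptions, not Waze's actual rules.

```python
from dataclasses import dataclass

SCORE_MIN, SCORE_MAX = 0.0, 10.0


def clamp(value: float) -> float:
    """Keep a score inside the [0, 10] range both scores share."""
    return max(SCORE_MIN, min(SCORE_MAX, value))


def reliability_from_level(level: int, max_level: int) -> float:
    """Hypothetical linear mapping from experience level to reliability."""
    return clamp(SCORE_MAX * level / max_level)


@dataclass
class Report:
    reliability: float       # trust in the reporting user
    confidence: float = 5.0  # assumed neutral starting point

    def confirm(self, step: float = 1.0) -> None:
        """Another user says the incident is still there."""
        self.confidence = clamp(self.confidence + step)

    def mark_not_there(self, step: float = 1.0) -> None:
        """Another user says the incident is gone."""
        self.confidence = clamp(self.confidence - step)

    def combined_score(self, weight: float = 0.5) -> float:
        """Assumed blend of data confidence and source reliability."""
        return weight * self.confidence + (1.0 - weight) * self.reliability


report = Report(reliability=reliability_from_level(level=3, max_level=6))
report.confirm()
report.confirm()
report.mark_not_there()
print(report.confidence, report.combined_score())
```

The point of keeping the two fields separate is that confidence changes over the life of a report while reliability is fixed by who filed it.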

This interplay between the temporal accuracy of the data and how much trust we have in the user providing it is key to building a stable and accurate system on user-provided data.

Furthermore, I've been doing an extensive literature review to explore how we can measure trust between users within the system. In Trust Evaluation Based on Evidence Theory in Online Social Networks, Wang et al. find that the relationship between user features and a trust decision relies not only on features of the user themselves (in the case of Waze, this would be the user's experience level) but also on the "subjective options of the user," that is, which features of a user another user prefers when making a trust decision. They also fold information flow probability into their trust determination scheme to provide evidence that data shared between the user being evaluated and another user will not be leaked to malicious actors. Here, confidence in the data is constrained to the idea of privacy leakage, but again we see the interplay between user reliability and data confidence.
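For readers unfamiliar with evidence theory, the sketch below shows the textbook Dempster rule of combination over a two-outcome frame (trust or distrust). It is a generic illustration of how two independent pieces of evidence about a user can be merged, not the specific scheme from Wang et al.; the frame, the mass values, and the function name `combine` are assumptions chosen for the example.

```python
from itertools import product

TRUST = frozenset({"trust"})
DISTRUST = frozenset({"distrust"})
UNKNOWN = TRUST | DISTRUST  # mass not committed to either outcome


def combine(m1: dict, m2: dict) -> dict:
    """Dempster's rule: multiply masses, keep non-empty intersections,
    and renormalize by the mass that did not fall on conflicting pairs."""
    combined = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        overlap = a & b
        if overlap:
            combined[overlap] = combined.get(overlap, 0.0) + mass_a * mass_b
        else:
            conflict += mass_a * mass_b
    if conflict >= 1.0:
        raise ValueError("evidence is totally conflicting")
    return {hypothesis: mass / (1.0 - conflict)
            for hypothesis, mass in combined.items()}


# Hypothetical evidence: one source based on user features, one on preferences.
feature_evidence = {TRUST: 0.6, UNKNOWN: 0.4}
preference_evidence = {TRUST: 0.5, DISTRUST: 0.2, UNKNOWN: 0.3}
belief = combine(feature_evidence, preference_evidence)
print(belief[TRUST], belief[DISTRUST], belief[UNKNOWN])
```

Two sources that each lean toward trust reinforce one another, and whatever mass remains on `UNKNOWN` makes the residual ignorance explicit rather than hiding it in a single number.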

Where Wang et al. evaluated users based on prescribed user features, Lin et al. opt to generate latent trust features between users in Guardian: Evaluating Trust in Online Social Networks with Graph Convolutional Networks. This paper introduces the use of graph convolutional networks to evaluate the pair-wise trust between two users in a social network. They describe two key properties of trust in a social network: its propagative and composable nature. The propagative nature of social trust "refers to the fact that trust may be passed from one user to another, creating chains of social trust that connects two users who are not explicitly connected," whereas the composable nature of social trust "refers to the fact that trust needs to be aggregated if several chains of social trust exist." One of the challenges they identify is representing these two properties as a joint metric. They also recognize the asymmetric nature of pair-wise trust, where one user may trust another more than they are trusted in return, and the challenge of representing this unbalanced relationship. To do so, they measure popularity trust and engagement trust: the degree to which other users trust the user under consideration and that user's willingness to engage with other users, respectively. Each of these values represents an application-dependent category of user, much like Waze's experience levels. Finally, they combine both measures into a single value using a fully connected layer.
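The propagative, composable, and asymmetric properties can be illustrated without any learning at all. The sketch below is a hand-written, path-based stand-in and deliberately not Guardian's graph convolutional model: trust along a chain is assumed to be the product of its edge weights, and multiple chains are assumed to be aggregated by taking the strongest one. The graph, the user names, and both of those rules are hypothetical.

```python
def chains(graph, src, dst, max_hops=3, _path=None):
    """Yield every simple chain of users from src to dst (at most max_hops edges)."""
    path = (_path or []) + [src]
    if src == dst:
        yield path
        return
    if len(path) > max_hops:
        return
    for neighbor in graph.get(src, {}):
        if neighbor not in path:
            yield from chains(graph, neighbor, dst, max_hops, path)


def chain_trust(graph, chain):
    """Propagation (assumed rule): trust decays multiplicatively along a chain."""
    trust = 1.0
    for a, b in zip(chain, chain[1:]):
        trust *= graph[a][b]
    return trust


def pairwise_trust(graph, src, dst, max_hops=3):
    """Composition (assumed rule): keep the strongest of all available chains."""
    scores = [chain_trust(graph, c) for c in chains(graph, src, dst, max_hops)]
    return max(scores, default=0.0)


# Directed edges: graph[a][b] is how much a trusts b, so trust is asymmetric.
graph = {
    "alice": {"bob": 0.9, "carol": 0.5},
    "bob": {"dave": 0.8},
    "carol": {"dave": 0.9},
    "dave": {"alice": 0.2},
}
print(pairwise_trust(graph, "alice", "dave"))  # two chains, via bob and via carol
print(pairwise_trust(graph, "dave", "alice"))  # a single direct, weaker edge
```

A learned model replaces the fixed product and max rules with functions fitted to observed trust relations, which is what makes representing both properties jointly the hard part.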

Finally, a user-data network need not consist only of human-to-human relationships and interactions. An Internet of Things (IoT) network, in which edge device sensors provide data for consumption by either other edge sensors or a human user, represents an interesting environment in which to evaluate trust. Here, the idea of a user can be abstracted into a more general agent, since the network can consist of a heterogeneous mix of sensors and users. Each sensor can be assigned trust values based on historical performance and interactions but may eventually "misbehave" in the sense that a malfunction degrades the accuracy of the data it provides. In Trust-Based Model for the Assessment of the Uncertainty of Measurements in Hybrid IoT Networks, Cofta et al. use the idea of measuring trust, confidence, and reputation to lower an IoT network's total uncertainty. The idea is roughly the same as in the two previously mentioned papers. They measure the trust and confidence each sensor enjoys from other sensors in the network, which allows them to assign a value for how much uncertainty that sensor's data introduces into the network. A sensor with a lower uncertainty value can therefore have more impact on the network than one with higher uncertainty. This framework is particularly interesting because uncertainty measurement is tied to the quality of the data being provided to the network and does not rely on the much noisier nature of human-to-human relationships, while still considering the interplay between trust (this time among edge sensors) and the quality of their data over time.
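One simple way to see how a lower-uncertainty sensor can "have more impact" is standard inverse-variance weighting, sketched below. This is a generic statistical technique that I am using as an illustration, not the trust model from Cofta et al.; the function name `fuse`, the readings, and the treatment of uncertainty as a standard deviation are assumptions.

```python
def fuse(readings):
    """Combine (value, uncertainty) pairs by inverse-variance weighting.

    Each uncertainty is treated as a standard deviation greater than zero,
    so a sensor with half the uncertainty gets four times the weight.
    """
    weights = [1.0 / (uncertainty ** 2) for _, uncertainty in readings]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, readings)) / total
    fused_uncertainty = (1.0 / total) ** 0.5
    return value, fused_uncertainty


# Hypothetical temperature readings: a well-trusted sensor and a noisier one.
readings = [(20.0, 1.0), (22.0, 2.0)]
value, uncertainty = fuse(readings)
print(value, uncertainty)
```

The fused estimate sits closer to the more certain sensor, and the fused uncertainty is lower than that of either sensor alone, which matches the goal of lowering the network's total uncertainty.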

Through literature review and use-case development, I've started to formulate some notional research questions:

- Can we leverage known high accuracy sources? In other words, can we use sources we can implicitly trust as a kind of ground-truth?

- Can we leverage source/agent behavior to model a source trust coefficient? Can we do so without input from other agents?

- Can we use both user behavior and the quality of service (QoS) of their data provision as a metric?

- Is there a model for conformant data and can we detect anomalous data from network consensus?
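As a starting point for the last question, here is a minimal sketch of consensus-based anomaly detection using the median and the median absolute deviation (MAD). It assumes that each source reports a single number for the same quantity and that the majority is roughly right; the 3.5 threshold and 0.6745 scaling are the conventional modified z-score constants, and the source names and speeds are made up.

```python
from statistics import median


def flag_anomalies(reports, threshold=3.5):
    """Return the sources whose value deviates strongly from network consensus.

    reports maps a source name to its reported value. Consensus is the median,
    and spread is the median absolute deviation, both robust to a few outliers.
    """
    values = list(reports.values())
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        # Every typical source agrees exactly, so any deviation stands out.
        return {src for src, v in reports.items() if v != center}
    return {src for src, v in reports.items()
            if 0.6745 * abs(v - center) / mad > threshold}


# Hypothetical speed reports (km/h) for the same road segment.
speeds = {"a": 42, "b": 45, "c": 44, "d": 43, "e": 5}
print(flag_anomalies(speeds))
```

A flagged value is only anomalous relative to consensus: source "e" may be the first to see a new traffic jam, which is exactly why the first question about known high-accuracy sources matters.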

With these questions I believe I can start to investigate novel approaches to modeling user-data network dynamics, with the goal of doing so without application-specific dependencies and with sufficient generality to cover both human-to-human social networks and networks involving autonomous agents. We've seen how networks like Waze leverage trust and reputation to create a stable network of accurate data. We then looked at some representative literature on methods of measuring user trust in networks and saw how certain approaches, like that of Cofta et al., include data quality in their assessment. Taken together, these show a general pattern: user behavior and relationships jointly represent the features we need to examine in order to evaluate a user's trust level.

Bibliography

[1] J. Wang, K. Qiao and Z. Zhang, "Trust Evaluation Based on Evidence Theory in Online Social Networks," International Journal of Distributed Sensor Networks, vol. 14, 2018, doi: 10.1177/1550147718794629.

[2] W. Lin, Z. Gao and B. Li, "Guardian: Evaluating Trust in Online Social Networks with Graph Convolutional Networks," IEEE INFOCOM 2020 - IEEE Conference on Computer Communications, 2020, pp. 914-923, doi: 10.1109/INFOCOM41043.2020.9155370.

[3] P. Cofta, C. Orłowski and J. Lebiedź, "Trust-Based Model for the Assessment of the Uncertainty of Measurements in Hybrid IoT Networks," Sensors, vol. 20, art. 6956, 2020, doi: 10.3390/s20236956.

~ Jim Ecker
