2020-12-23: UI Automation: a new prospect in accessibility

Fig: Pipeline for Accessibility API

UI Automation (UIA) is a Microsoft accessibility framework for Windows. It represents the user interface as an ordered tree of automation elements and provides a single interface for navigating that tree. UIA acts as a bridge between applications and the user by exposing programmatic information about the user interfaces (UI) of Windows applications. This helps make products more accessible for everyone, including people with disabilities all over the world. UIA offers programmatic access to most UI components. It also lets assistive technology applications, such as screen readers, tell users about the UI and control it.
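The following minimal Python sketch makes the "ordered tree with a single navigation interface" idea concrete. It is a toy model, not the UIA API. `Element` and `TreeWalker` are hypothetical names. The three moves only loosely mirror the `GetParentElement`, `GetFirstChildElement`, and `GetNextSiblingElement` methods of the real COM interface `IUIAutomationTreeWalker`.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)  # identity comparison avoids recursing through parent links
class Element:
    """Hypothetical stand-in for a UIA automation element (not a real binding)."""
    name: str
    control_type: str
    children: list[Element] = field(default_factory=list)
    parent: Optional[Element] = field(default=None, repr=False)

    def add(self, child: Element) -> Element:
        # Keep parent and child links in sync so navigation works both ways.
        child.parent = self
        self.children.append(child)
        return child


class TreeWalker:
    """A single navigation interface shared by every element in the tree."""

    def parent(self, e: Element) -> Optional[Element]:
        return e.parent

    def first_child(self, e: Element) -> Optional[Element]:
        return e.children[0] if e.children else None

    def next_sibling(self, e: Element) -> Optional[Element]:
        if e.parent is None:
            return None  # the root has no siblings
        siblings = e.parent.children
        i = next(idx for idx, s in enumerate(siblings) if s is e)
        return siblings[i + 1] if i + 1 < len(siblings) else None
```

Every element is reached through the same few moves. So in this model, a client never needs control-specific code just to walk the tree.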

For assistive technologies, UI Automation provides access to important information about what is happening in a particular user interface. This includes how the system will react when:

- A button is pressed

- A list is opened

- A menu item is selected

- A combo box is expanded.
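The "how will the system react" information can be sketched in Python as a simple publish/subscribe model. UI elements raise events when their state changes, and an assistive tool that has subscribed hears about them. All class names and event labels here are hypothetical. Real UIA delivers events through COM handler interfaces, for example for the Invoke pattern's Invoked event or a change in the ExpandCollapse pattern's state. This toy does not reproduce that machinery.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class UiEvent:
    source: str  # name of the element that raised the event
    kind: str    # hypothetical event label, e.g. "Invoked"
    detail: str = ""


class EventHub:
    """Toy broadcaster: UI elements raise events, assistive tools subscribe."""

    def __init__(self) -> None:
        self._listeners: list[Callable[[UiEvent], None]] = []

    def subscribe(self, listener: Callable[[UiEvent], None]) -> None:
        self._listeners.append(listener)

    def raise_event(self, event: UiEvent) -> None:
        for listener in self._listeners:
            listener(event)


class Button:
    def __init__(self, name: str, hub: EventHub) -> None:
        self.name, self._hub = name, hub

    def press(self) -> None:
        # Comparable in spirit to the Invoke pattern's "Invoked" event.
        self._hub.raise_event(UiEvent(self.name, "Invoked"))


class ComboBox:
    def __init__(self, name: str, hub: EventHub) -> None:
        self.name, self._hub = name, hub
        self.expanded = False

    def expand(self) -> None:
        self.expanded = True
        # Comparable in spirit to a change in the ExpandCollapse state.
        self._hub.raise_event(
            UiEvent(self.name, "ExpandCollapseStateChanged", "Expanded"))
```

A screen reader in this model would be just another subscriber. It turns each `UiEvent` into speech.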

Assistive technology applications, such as screen readers or speech recognition programs, use the information exposed through UI Automation to help users navigate and interact effectively with an interface.

Designing for UIA

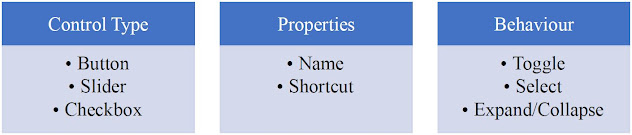

For an application to work well with assistive technology, designers must peel back the layers of the UI and ensure that UIA represents each one correctly and coherently. For every layer of the UI, you must know which type of control the user will interact with, such as a button or a combo box. Once the control type is known, the stage is set for the user's other expectations. For instance, when users encounter a link, they expect it to take them to a different section of the page, a different web page, or another application. From a designer's perspective, you therefore need to identify the control type correctly.

For instance, in Microsoft Word the "Bold" button has the Button control type, which differs from the ComboBox control type of the font box. At the time of writing, UIA defines about 40 control types, including Button, CheckBox, ComboBox, Edit, Hyperlink, List, Menu, and Tab.
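As a small illustration, a handful of control types can be written in Python with the numeric IDs used by the `UIA_*ControlTypeId` constants. This is a subset only. The mapping to a "typical" control pattern is a deliberate simplification for showing how a control type sets expectations. It is not the full requirements table from the UIA documentation, and the helper `expectation` is hypothetical.

```python
from enum import IntEnum


class ControlType(IntEnum):
    """Subset of UIA control type IDs (values of the UIA_*ControlTypeId constants)."""
    BUTTON = 50000
    CHECK_BOX = 50002
    COMBO_BOX = 50003
    EDIT = 50004
    HYPERLINK = 50005
    LIST = 50008
    MENU = 50009
    TAB = 50018


# Simplified: the control pattern a user (and an assistive tool) would most
# commonly expect from each control type.
TYPICAL_PATTERN = {
    ControlType.BUTTON: "Invoke",
    ControlType.CHECK_BOX: "Toggle",
    ControlType.COMBO_BOX: "ExpandCollapse",
    ControlType.EDIT: "Value",
    ControlType.HYPERLINK: "Invoke",
}


def expectation(ct: ControlType) -> str:
    """Return the typical pattern, or a fallback for types not mapped here."""
    return TYPICAL_PATTERN.get(ct, "depends on the control")


# The Word example from the text: two controls, two different expectations.
word_controls = {"Bold": ControlType.BUTTON, "Font": ControlType.COMBO_BOX}
```

Here `expectation(word_controls["Font"])` gives `"ExpandCollapse"`, so the user expects the font box to open a list of choices rather than perform an action.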

Hierarchy of elements

Within the hierarchy, proper nesting of elements allows successful navigation when a person uses touch input. For example, a person may use Narrator and swipe to explore the elements in the UIA tree. Touch navigation becomes confusing and frustrating if the hierarchy is not clear, including its ordering and parent/child relationships. It is also essential to expose every relevant property in its most atomic form. Suppose a developer fills in one property and jams all the other related information into it. The screen reader will then have a hard time separating that information and speaking each piece when it is needed, depending on the user's settings.
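The following simplified Python model shows why nesting matters for swiping. It treats each swipe as a move to the next element in a pre-order, depth-first walk of the tree. Real Narrator navigation offers more modes than this, so this is an assumption for illustration only. `Node` and `swipe_order` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Iterator


@dataclass(eq=False)
class Node:
    name: str
    children: list[Node] = field(default_factory=list)


def swipe_order(root: Node) -> Iterator[str]:
    """Yield element names in pre-order, depth-first order (one per swipe)."""
    stack = [root]
    while stack:
        node = stack.pop()
        yield node.name
        # Push children in reverse so the first child is visited first.
        stack.extend(reversed(node.children))


# Well nested: Bold sits with the other font commands.
good = Node("Home", [Node("Font", [Node("Bold"), Node("Italic")]),
                     Node("Paragraph", [Node("Bullets")])])

# Mis-parented: Bold was attached to the wrong group.
broken = Node("Home", [Node("Font", [Node("Italic")]),
                       Node("Paragraph", [Node("Bullets"), Node("Bold")])])
```

Swiping through `good` reaches Bold right after the Font group. In `broken`, Bold only turns up after the paragraph commands, which is the kind of confusion a wrong parent/child relationship causes.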

For example, a user who is unfamiliar with Office or text formatting may not understand what Bold does. When they first reach the button, they need its name ("Bold"), what it does (makes the text bold), and its keyboard shortcut ("Ctrl+B"). Break the Bold button's information down into its name, help text, and keyboard shortcut. Different assistive technologies can then retrieve each piece individually at the user's request, depending on the verbosity level the user has set.
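Here is a short Python sketch of why atomic properties help. The field names echo the real UIA `Name`, `HelpText`, and `AcceleratorKey` properties, but the class is a plain illustration, not a UIA binding. The three verbosity levels are a hypothetical scheme, not Narrator's actual settings.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ElementProperties:
    # Each piece of information lives in its own field (its own "property").
    name: str
    help_text: str = ""
    accelerator_key: str = ""


def describe(props: ElementProperties, verbosity: int) -> list[str]:
    """Hypothetical levels: 1 = name, 2 = + shortcut, 3 = + help text."""
    parts = [props.name]
    if verbosity >= 2 and props.accelerator_key:
        parts.append(props.accelerator_key)
    if verbosity >= 3 and props.help_text:
        parts.append(props.help_text)
    return parts


bold = ElementProperties("Bold", help_text="Make your text bold",
                         accelerator_key="Ctrl+B")

# Everything jammed into the name: no verbosity level can separate it again.
jammed = ElementProperties("Bold Ctrl+B Make your text bold")
```

With `bold`, a terse user hears just "Bold" while a beginner can ask for all three pieces. With `jammed`, every level gets the same run-on string.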

How UIA works in assistive technology

Many assistive applications now use the UIA API. Two common examples are the Narrator screen reader and Magnifier, both of which come pre-installed with Windows. Magnifier uses a subset of UIA information to determine where the user is on the screen and then magnifies that area. It uses UIA to learn when keyboard focus changes and what the bounding rectangle of the newly focused element is. Suppose a user has zoomed into the document they are typing in and wants to change how the font looks. They press the Alt key and notice that focus has moved to the ribbon at the top left of the screen, which is where the keyboard focus now is. As the user moves around, Magnifier follows.
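The following Python toy shows the "follow the focus" behaviour. Each time focus changes, it centres a magnified viewport on the focused element's bounding rectangle and clamps the viewport to the screen edges. It is an assumption-laden sketch, not how Windows Magnifier is implemented. In real UIA the trigger would be a focus-changed event and the rectangle would come from the `BoundingRectangle` property. `Rect`, `viewport_for_focus`, and `ToyMagnifier` are hypothetical names, and the pixel values are made up.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    left: int
    top: int
    width: int
    height: int


def viewport_for_focus(focus: Rect, screen: Rect, zoom: int) -> Rect:
    """Centre a 1/zoom-sized viewport on the focused element's rectangle."""
    w, h = screen.width // zoom, screen.height // zoom
    cx = focus.left + focus.width // 2
    cy = focus.top + focus.height // 2
    # Clamp so the viewport never extends past the screen edges.
    left = min(max(cx - w // 2, screen.left), screen.left + screen.width - w)
    top = min(max(cy - h // 2, screen.top), screen.top + screen.height - h)
    return Rect(left, top, w, h)


class ToyMagnifier:
    """Hypothetical focus-following magnifier, not the Windows Magnifier."""

    def __init__(self, screen: Rect, zoom: int) -> None:
        self.screen, self.zoom = screen, zoom
        # Start centred on the whole screen.
        self.viewport = viewport_for_focus(screen, screen, zoom)

    def on_focus_changed(self, focused: Rect) -> None:
        # Stand-in for a focus-changed event handler that reads the newly
        # focused element's bounding rectangle.
        self.viewport = viewport_for_focus(focused, self.screen, self.zoom)
```

On a 1920x1080 screen at 2x zoom, focusing a small button near the top-left corner (the ribbon case in the text) snaps the viewport to `Rect(0, 0, 960, 540)`, because the clamp stops it at the screen edge.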

Fig: Magnifier focusing on the Bold button in Microsoft Word.

Narrator, on the other hand, gives the context of where the user is on the screen: inside the document, inside an application called Word. If the user moves focus to the Bold button, Narrator says "Off", "Bold Button", "Alt H 1", "Control + B", "Make your text bold". In other words, the screen reader tells you which element you are on, which keys reach it, and what the command does. It can do this because each component, or piece of anatomy, is exposed as separate UIA data rather than being jammed into a single property on the element. The screen reader looks at the control type and then at the properties: the element's keyboard shortcuts and whether it should be announced as on or off.
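The following Python sketch shows how an announcement like the one above could be assembled from separate pieces of data. The field names echo real UIA properties (`Name`, `LocalizedControlType`, `AccessKey`, `AcceleratorKey`, `HelpText`) and the Toggle pattern's on/off state. The class and the `announce` function are hypothetical. The speaking order is an assumption based only on the single Bold-button example quoted in the text.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class FocusedElement:
    name: str
    localized_control_type: str
    access_key: str = ""
    accelerator_key: str = ""
    help_text: str = ""
    toggle_on: Optional[bool] = None  # None: the element has no on/off state


def announce(e: FocusedElement) -> list[str]:
    """Build speech from separate fields, in the order quoted for Bold."""
    parts: list[str] = []
    if e.toggle_on is not None:
        parts.append("On" if e.toggle_on else "Off")
    parts.append(f"{e.name} {e.localized_control_type}")
    # Optional extras are spoken only when the element actually provides them.
    for extra in (e.access_key, e.accelerator_key, e.help_text):
        if extra:
            parts.append(extra)
    return parts


bold = FocusedElement("Bold", "Button", access_key="Alt H 1",
                      accelerator_key="Control + B",
                      help_text="Make your text bold", toggle_on=False)
```

Because the toggle state is its own piece of data, the same element is announced as "On" as soon as the text under the cursor is bold, with no change to its name.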

Summary

From the developer's perspective, users must be able to reach all of an application's functionality efficiently, whether through its visual representation, its programmatic representation, or both. UIA aims to create and maintain a reliable communication channel between the user and the screen reader, so the screen reader can access the details of the application. In the next post, I will use examples of UIA hierarchies, properties, patterns, and events to discuss a real-world application that has played a part in accessibility research.

-- Md Javedul Ferdous (@jaf_ferdous)
