2020-06-10: Hypercane Part 2: Synthesizing Output For Other Tools

This image by NOAA is licensed under NOAA's Image Licensing & Usage Info.

In Part 1 of this series of blog posts, I introduced Hypercane, a tool for automatically sampling mementos from web archive collections. A human who wishes to create a sample of documents from a web archive collection is confronted with thousands of documents from which to choose, and most collections contain insufficient metadata for making decisions. Hypercane's focus is to supply us with a list of memento URI-Ms derived from the input we provide. One of the uses for this sampling is summarization. The previous blog post in this series focused on Hypercane's high-level sample and report actions and how they can be used for storytelling. This post focuses on how to generate output for other tools via Hypercane's synthesize action.

The goal of the DSA project: to summarize a web archive collection by selecting a small number of exemplars and then visualize them with social media storytelling techniques. Hypercane performs this sampling; Raintale renders the visualization of the summary.

Our roadmap of Hypercane posts is as follows:

- Hypercane Part 1: Intelligent Sampling of Web Archive Collections - an introduction to Hypercane

- Hypercane Part 2: Synthesizing Output For Other Tools - this post

- Hypercane Part 3: Building Your Own Algorithms - this is where we highlight Hypercane's identify, filter, cluster, score, and order actions

In this post, I will show how to use the output of Hypercane's synthesize action with Raintale, Archives Unleashed Toolkit, and Gensim. Like Part 1, this post will continue to use IIPC's COVID-19 Archive-It collection 13529.

As with other Hypercane commands, to view items available for use with the synthesize action, we use the --help argument.

# hc synthesize --help

'hc synthesize' is used to synthesize a web archive collection into other formats, like WARC, JSON, or a set of files in a directory

Supported commands:

* warcs - for generating a directory of WARCs

* files - for generating a directory of mementos

* bpfree-files - for generating a directory of boilerplate-free mementos

* raintale-story - for generating a JSON file suitable as input for Raintale

* combine - combine the output from several Hypercane runs together

Examples:

hc synthesize warcs -i archiveit -a 694 --depth 2 -o output-directory -cs mongodb://localhost/cache

hc synthesize files -i timemaps -a timemap-file.tsv -o output-directory -cs mongodb://localhost/cache

hc synthesize raintale-story -i mementos -a memento-file.tsv -o story.json -cs mongodb://localhost/cache

Synthesizing JSON for Raintale

In Part 1, I provided the list of Hypercane and Raintale commands used to generate the story shown below. Now I will detail how the synthesize command combines these items together.

The story from the previous post, generated from Archive-It collection 13529.

The commands listed below create the data needed to tell the story. The report commands, covered in the previous post, provide the metadata, entities, terms, and image ranking used to generate the final story.

# cat create-story-for-archiveit-13529.sh

#!/bin/bash

export HC_CACHE_STORAGE=mongodb://localhost/cache13529

hc sample dsa1 -i archiveit -a 13529 -o story-mementos.tsv

hc report metadata -i archiveit -a 13529 -o metadata.json

hc report entities -i mementos -a story-mementos.tsv -o entities.json

hc report terms -i mementos -a story-mementos.tsv -o sumgrams.json --sumgrams

hc report image-data -i mementos -a story-mementos.tsv -o imagedata.json

hc synthesize raintale-story -i mementos -a story-mementos.tsv \
    --imagedata imagedata.json --termdata sumgrams.json \
    --entitydata entities.json --collection_metadata metadata.json \
    --title "Archive-It Collection" -o raintale-story.json

tellstory -i raintale-story.json --storyteller template \
    --story-template raintale-templates/archiveit-collection-template1.html \
    --generated-by "AlNoamany's Algorithm" \
    -o story-post.html

Like other Hypercane commands, the synthesize action's raintale-story command accepts a list of memento URI-Ms, an Archive-It collection ID, a list of TimeMap URI-Ts, or a list of original resource URI-Rs. From this input, it generates a JSON-formatted Raintale story file. Below is a sample of that JSON file from the story for Archive-It collection 13529.

# cat raintale-story.json

{

"metadata": {

"id": "13529",

"exists": true,

"metadata_timestamp": "2020-04-21 01:55:36",

"name": "Novel Coronavirus (COVID-19)",

"uri": "https://archive-it.org/collections/13529",

"collected_by": "International Internet Preservation Consortium",

"collected_by_uri": "https://archive-it.org/organizations/769",

"description": "A collection created by the Content Development Group of the International Internet Preservation Consortium in collaboration with Archive-It to preserve web content related to the ongoing Nove

"subject": [

"Science & Health",

"Spontaneous Events",

"Novel Coronavirus (Covid-19)",

"Epidemics",

"Coronavirus infections",

"COVID-19 Epidemic"

],

"archived_since": "Feb, 2020",

"private": false,

"optional": {

"creator": [

"International Internet Preservation Consortium"

],

"collector": [

"International Internet Presevation Consortium"

]

},

"terms": [

"covid 19",

"public health",

"covid 19",

"the centers for disease control and prevention",

"the world health organization"

],

"entities": [

"china",

"wuhan",

"cdc",

"japan",

"chinese"

]

},

"title": "Archive-It Collection 13529: Novel Coronavirus (COVID-19)",

"elements": [

{

"type": "link",

"value": "http://wayback.archive-it.org/13529/20200305194811/http://www.taipeitimes.com/News/taiwan/archives/2020/02/23/2003731479"

},

{

"type": "link",

"value": "http://wayback.archive-it.org/13529/20200327080631/http://www.timiskaminghu.com/90484/COVID-19/"

},

... truncated for brevity ...

],

"story image": "https://wayback.archive-it.org/13529/20200315123158/https://www.gannett-cdn.com/presto/2020/01/30/USAT/bd6436a9-a4c7-4647-b560-6ee9b95baf66-AFP_AFP_1OJ1R4.jpg?crop=4623,2601,x0,y293&width=3200&hei

}

hc synthesize raintale-story also accepts a number of optional arguments.

The --imagedata argument specifies a file containing the output of hc report image-data. This argument instructs Hypercane to find the highest-scoring image in that report and make its URL the value of the story image key in the resulting JSON file. Raintale uses this value as the overall striking image of the story it renders.

The --collection_metadata argument specifies a JSON file whose contents Hypercane should include under the metadata key of the output. The --termdata and --entitydata arguments instruct Hypercane to include the content of files containing the output of hc report terms and hc report entities. As the sample above shows, Hypercane places these values inside the main metadata key. Not shown is the --extradata option, which allows data from other JSON-formatted files to be inserted into the Raintale story.
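To make the assembly concrete, here is a minimal Python sketch of how such a story file could be put together. It is not Hypercane's code: the function name build_raintale_story is hypothetical, and it assumes the image report has already been reduced to a flat dictionary mapping image URL to score, which may not match the real structure of hc report image-data output. The key names (metadata, terms, entities, title, elements, story image) follow the sample JSON above, and the example.org image URLs are placeholders.

```python
import json


def build_raintale_story(urims, title, collection_metadata=None,
                         terms=None, entities=None, image_scores=None):
    """Assemble a Raintale-style story dict (hypothetical sketch).

    image_scores is ASSUMED to be a flat {image_url: score} dict; the real
    image report produced by Hypercane may be structured differently.
    """
    # Collection metadata forms the base of the "metadata" key.
    metadata = dict(collection_metadata or {})

    # Terms and entities are nested inside "metadata", as in the sample JSON.
    if terms is not None:
        metadata["terms"] = list(terms)
    if entities is not None:
        metadata["entities"] = list(entities)

    story = {
        "metadata": metadata,
        "title": title,
        # Each memento becomes a "link" element for Raintale to render.
        "elements": [{"type": "link", "value": urim} for urim in urims],
    }

    # The highest-scoring image becomes the story's striking image.
    if image_scores:
        story["story image"] = max(image_scores, key=image_scores.get)

    return story


if __name__ == "__main__":
    story = build_raintale_story(
        ["http://wayback.archive-it.org/13529/20200305194811/http://www.taipeitimes.com/News/taiwan/archives/2020/02/23/2003731479"],
        "Archive-It Collection 13529: Novel Coronavirus (COVID-19)",
        collection_metadata={"id": "13529"},
        terms=["covid 19", "public health"],
        entities=["china", "wuhan"],
        image_scores={"https://example.org/a.jpg": 0.2,
                      "https://example.org/b.jpg": 0.9},
    )
    print(json.dumps(story, indent=2))
```

Keeping terms and entities under metadata, rather than at the top level, matches where they appear in the sample story file above.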

Raintale compares the keys in the JSON output to the template submitted to its tellstory command. If the keys match variables in the template, then they are included in the rendered story. With the exception of the sample command, the other commands are also used each day in our SHARI process to summarize the day's biggest news story.
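The key-matching behavior can be illustrated with Python's string.Template, used here purely as a stand-in; Raintale's own template engine and syntax differ, and the function name render_story is hypothetical. Placeholders that match a story key are filled in and keys without a placeholder are ignored. Because $-placeholders must be identifiers, this sketch exposes a key with a space, such as story image, as story_image.

```python
from string import Template


def render_story(story, template_text):
    """Fill template placeholders whose names match top-level story keys.

    string.Template is a STAND-IN for Raintale's real template engine.
    """
    values = {}
    for key, value in story.items():
        # Only simple scalar values are substituted in this sketch.
        if isinstance(value, (str, int, float)):
            values[key.replace(" ", "_")] = value
    # Keys with no matching placeholder are simply ignored; placeholders
    # with no matching key are left as-is by safe_substitute.
    return Template(template_text).safe_substitute(values)


if __name__ == "__main__":
    story = {"title": "Archive-It Collection 13529",
             "story image": "https://example.org/b.jpg"}
    print(render_story(story, "<h1>$title</h1><img src='$story_image'>"))
```

A real template engine may render unmatched placeholders as empty strings instead of leaving them in place.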

Synthesizing WARCs for Archives Unleashed Toolkit

My work includes exploring new algorithms for corpus summarization whose results are then visualized via storytelling. I also have to devise user studies to determine how these summaries improve a human's understanding of a given collection. For both of these tasks, I must explore collections in detail. Even though Hypercane contains various reports, the Archives Unleashed Toolkit (AUT) provides many of the tools I need to perform this exploration. Unfortunately, AUT focuses on WARC files, and I do not have access to the WARC files of the collections I would like to study. Thus, I included the ability for Hypercane to synthesize the HTML files from an Archive-It collection into a directory of WARC files.

Hypercane's synthesizing is different from the crawling performed by tools such as Heritrix. The resulting WARCs are a best effort to re-synthesize records as close as possible to the original crawl. Each record in the WARC corresponds to a memento. Hypercane uses the Memento Protocol's original relation as each WARC record's WARC-Target-URI, whereas Heritrix would have used the memento's URI-M. Hypercane also uses the value of the Memento Protocol's Memento-Datetime as the WARC-Date of the record. Finally, Hypercane is aware of augmented and raw mementos. When inserting the document into each WARC record, it favors the raw memento, the content as originally crawled. This way the resulting analysis avoids being confused by rewritten links, archive banners, and other augmentations.
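The header mapping described above can be sketched with the Python standard library. This is an illustrative sketch, not Hypercane's implementation, and the function names are hypothetical. It parses the Link header of a memento's HTTP response to find the original relation, converts Memento-Datetime (an RFC 1123 date in GMT) to the ISO 8601 form used by WARC-Date, and assumes a Wayback-style archive such as wayback.archive-it.org, where inserting the id_ modifier after a URI-M's timestamp requests the raw memento.

```python
import re
from datetime import timezone
from email.utils import parsedate_to_datetime

# Wayback-style URI-M: .../<14-digit timestamp>[optional xx_ modifier]/<URI-R>
_TIMESTAMP = re.compile(r"/(\d{14})(?:[a-z]{2}_)?/")


def raw_urim(urim):
    """Rewrite a Wayback-style URI-M to request the raw memento (id_).

    ASSUMPTION: the archive follows the Wayback id_ convention; other
    archives may expose raw mementos differently, or not at all.
    """
    return _TIMESTAMP.sub(r"/\1id_/", urim, count=1)


def link_relations(link_header):
    """Parse an HTTP Link header into a {relation: [URI, ...]} dict."""
    relations = {}
    # Each entry is <URI> followed by its parameters up to the next '<'.
    for uri, params in re.findall(r"<([^>]*)>([^<]*)", link_header):
        match = re.search(r'rel\s*=\s*"([^"]*)"', params)
        if not match:
            continue
        # One rel value may hold several relations, e.g. rel="first memento".
        for rel in match.group(1).split():
            relations.setdefault(rel, []).append(uri)
    return relations


def warc_headers_for_memento(response_headers):
    """Derive WARC-Target-URI and WARC-Date from a memento's HTTP headers.

    Raises KeyError if the Link header has no "original" relation.
    """
    original = link_relations(response_headers["Link"])["original"][0]
    dt = parsedate_to_datetime(response_headers["Memento-Datetime"])
    if dt.tzinfo is None:  # Memento-Datetime is defined to be in GMT
        dt = dt.replace(tzinfo=timezone.utc)
    return {
        "WARC-Target-URI": original,
        "WARC-Date": dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
    }
```

The resulting values, together with the raw memento's content, could then be written as a response record with a WARC library such as warcio.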

The command below extracts the seeds from Archive-It collection 13529, discovers their TimeMaps, discovers the mementos linked from those TimeMaps, and synthesizes them into WARCs stored in the directory 13529-warcs:

# hc synthesize warcs -i archiveit -a 13529 -o 13529-warcs

2020-04-27 13:41:59,395 [INFO] hypercane.actions.synthesize: Starting generation of files from input

2020-04-27 13:41:59,396 [INFO] hypercane.identify: processing input for type archiveit

2020-04-27 13:41:59,396 [INFO] hypercane.identify: discovering mementos for input type archiveit

2020-04-27 13:43:19,209 [ERROR] hypercane.identify: Skipping TimeMap http://wayback.archive-it.org/13529/timemap/link/https://jobtube.cn/wv/?from=singlemessage&isappinstalled=0, encountered problem extracting URI-Ms from TimeMap: KeyError('mementos')

Traceback (most recent call last):

File "/Users/smj/.virtualenvs/hypercane/lib/python3.7/site-packages/hypercane/identify/__init__.py", line 143, in download_urits_and_extract_urims

urims = extract_urims_from_TimeMap(timemap_content)

File "/Users/smj/.virtualenvs/hypercane/lib/python3.7/site-packages/hypercane/identify/__init__.py", line 109, in extract_urims_from_TimeMap

for memento in timemap_json_text["mementos"]["list"]:

KeyError: 'mementos'

... omitted for brevity ....

2020-04-27 19:23:32,919 [WARNING] hypercane.synthesize.warcs: non-200 status 400, not saving this URI to WARC: http://wayback.archive-it.org/13529/20200319122547/https://www.yna.co.kr/safe

2020-04-27 19:23:33,393 [WARNING] hypercane.synthesize.warcs: non-200 status 400, not saving this URI to WARC: http://wayback.archive-it.org/13529/20200408002314/https://www.yna.co.kr/safe

2020-04-27 19:24:14,084 [WARNING] hypercane.synthesize.warcs: non-200 status 404, not saving this URI to WARC: http://wayback.archive-it.org/13529/20200325052358/https://www.zdnet.com/article/coronavirus-update-2020-tech-conference-cancellations-and-travel-bans/

2020-04-27 19:24:25,245 [WARNING] hypercane.synthesize.warcs: non-200 status 404, not saving this URI to WARC: http://wayback.archive-it.org/13529/20200328043208/https://yukon.ca/fr/informations-sur-le-coronavirus

2020-04-27 19:24:27,535 [INFO] hypercane.actions.synthesize: Done generating directory of files, output is at 13529-warcs-test

The warcs command also accepts a --depth argument that instructs it to crawl the collection to the specified depth. We will provide more information on the crawling process in Part 3. The -l argument allows you to supply the name of a log file to capture the logging output. This log file records all issues with downloading or saving TimeMaps or mementos with a severity of [WARNING] or [ERROR].
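The [ERROR] entry in the log above shows Hypercane skipping a TimeMap that lacked a mementos key rather than aborting the run. A minimal sketch of that defensive pattern follows. It assumes the JSON TimeMap layout implied by the traceback ({"mementos": {"list": [...]}}) and that each entry stores its URI-M under a uri key; both are assumptions, and extract_urims is a hypothetical name, not Hypercane's function.

```python
import json
import logging

logger = logging.getLogger(__name__)


def extract_urims(timemap_json_text, urit=""):
    """Return the URI-Ms listed in a JSON TimeMap, or [] if it has none.

    ASSUMES the layout {"mementos": {"list": [{"uri": ...}, ...]}}.
    """
    try:
        timemap = json.loads(timemap_json_text)
        entries = timemap["mementos"]["list"]
    except (ValueError, KeyError, TypeError) as error:
        # Log and skip this TimeMap instead of aborting the whole run.
        logger.error("Skipping TimeMap %s: %r", urit, error)
        return []
    return [entry["uri"] for entry in entries if "uri" in entry]
```

Returning an empty list keeps a single malformed or empty TimeMap from stopping the discovery of mementos for the rest of the collection.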

Once the command has finished writing WARCs to 13529-warcs, we can use Archives Unleashed Toolkit to explore them. Below, I start a Spark shell with Archives Unleashed Toolkit loaded and ask Spark to list 10 URLs from these WARCs. Note that none of these URLs have a domain name of archive-it.org because Hypercane employed the Memento Protocol to discover the original resource URLs and wrote those values to the WARCs.

# ./spark-2.4.5-bin-hadoop2.7/bin/spark-shell --jars aut-0.80.0-fatjar.jar

20/06/02 14:46:36 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://nerfherder.lan:4040

Spark context available as 'sc' (master = local[*], app id = local-1591130805207).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 2.4.5

/_/

Using Scala version 2.11.12 (OpenJDK 64-Bit Server VM, Java 1.8.0_252)

Type in expressions to have them evaluated.

Type :help for more information.

scala> import io.archivesunleashed._

scala> RecordLoader.loadArchives("13529-warcs", sc).keepValidPages().map(r => r.getUrl).take(10)

res2: Array[String] = Array(

https://444.hu/tag/koronavirus,

https://ofsp-coronavirus.ch/,

https://www.nih.gov/health-information/coronavirus,

https://www.nhs.uk/conditions/coronavirus-covid-19/,

https://www.ccthd.org/coronavirus-2019ncov,

https://www.swissinfo.ch/ita/virus-wuhan-cina-test-depistaggio-svizzera/45509318,

https://www.coronavirus.gov/,

https://coronavirus.utah.gov/,

http://weekly.chinacdc.cn/news/TrackingtheEpidemic.htm,

https://health.mo.gov/living/healthcondiseases/communicable/novel-coronavirus/

)

scala>

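We can also use AUT to count the links between domains for each crawl date, writing the results as tab-separated files to the directory sitelinks-tsv. The Scala code is shown below.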

scala> :paste

// Entering paste mode (ctrl-D to finish)

import io.archivesunleashed._

import io.archivesunleashed.udfs._

RecordLoader.loadArchives("13529-warcs", sc)

.webgraph()

.groupBy(

$"crawl_date",

removePrefixWWW(extractDomain($"src")),

removePrefixWWW(extractDomain($"dest")))

.count()

.write.option("delimiter", "\t").csv("sitelinks-tsv/")

// Exiting paste mode, now interpreting.

import io.archivesunleashed._

import io.archivesunleashed.udfs._

scala>

# cat sitelinks-tsv/* | sort

... omitted for brevity ...

20200305 beyer.house.gov travel.state.gov 2

20200305 beyer.house.gov urldefense.proofpoint.com 6

20200305 beyer.house.gov vdh.virginia.gov 2

20200305 blogs.scientificamerican.com blogs.scientificamerican.com 8

20200305 blogs.scientificamerican.com facebook.com 1

20200305 blogs.scientificamerican.com gettyimages.com 1

20200305 blogs.scientificamerican.com instagram.com 1

20200305 blogs.scientificamerican.com m.facebook.com 1

20200305 blogs.scientificamerican.com partnerships.nature.com 1

20200305 blogs.scientificamerican.com reddit.com 1

20200305 blogs.scientificamerican.com rss.sciam.com 1

20200305 blogs.scientificamerican.com scientificamerican.com 52

20200305 blogs.scientificamerican.com springernature.com 1

20200305 blogs.scientificamerican.com theprepared.com 1

20200305 blogs.scientificamerican.com twitter.com 2

20200305 blogs.scientificamerican.com youtube.com 1

20200305 brookings.edu "" 3

20200305 brookings.edu brookings.edu 234

20200305 brookings.edu brookings.foxycart.com 6

... omitted for brevity ...

We see that a March 5, 2020 memento from blogs.scientificamerican.com had linked to different social networks, partnerships.nature.com, springernature.com, theprepared.com, and its own domain. Note that the crawl dates are from March 5 and not from April 27 when I created these WARCs. While generating the WARCs, Hypercane again used the Memento Protocol to discover the original crawl dates so that our AUT analysis would be similar to one produced by the original Archive-It collection 13529 WARCs.
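For readers less familiar with Spark, the aggregation performed by the Scala query above can be mirrored in plain Python on a small list of (crawl date, source URL, destination URL) edges. This is an analogue, not AUT's implementation: the hypothetical domain helper approximates removePrefixWWW(extractDomain(...)), and AUT may treat edge cases differently. In this sketch, a relative or malformed link yields an empty destination domain; whether that explains rows such as brookings.edu "" above is an assumption.

```python
from collections import Counter
from urllib.parse import urlsplit


def domain(url):
    """Lowercased host of a URL without a leading 'www.' ('' if none)."""
    host = urlsplit(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def site_links(edges):
    """Count (crawl_date, src_domain, dest_domain) triples from page links."""
    return Counter((date, domain(src), domain(dest)) for date, src, dest in edges)


if __name__ == "__main__":
    edges = [
        ("20200305", "https://blogs.scientificamerican.com/a", "https://www.scientificamerican.com/b"),
        ("20200305", "https://blogs.scientificamerican.com/a", "https://twitter.com/x"),
    ]
    for (date, src, dest), count in sorted(site_links(edges).items()):
        print(date, src, dest, count, sep="\t")
```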

What if we want to create a mathematical graph of links from the collection? Below, I produce graph output by slightly modifying the instructions in the "Export to Gephi" section of AUT's documentation.

scala> :paste

// Entering paste mode (ctrl-D to finish)

import io.archivesunleashed._

import io.archivesunleashed.udfs._

import io.archivesunleashed.app._

val webgraph = RecordLoader.loadArchives(

"13529-warcs", sc)

.webgraph()

val graph = webgraph.groupBy(

$"crawl_date",

removePrefixWWW(extractDomain($"src")).as("src_domain"),

removePrefixWWW(extractDomain($"dest")).as("dest_domain"))

.count()

.filter(!($"dest_domain"===""))

.filter(!($"src_domain"===""))

.filter(($"src_domain"==="blogs.scientificamerican.com"))

.orderBy(desc("count"))

.collect()

WriteGEXF(graph, "links-for-gephi-0.90.0.gexf")

// Exiting paste mode, now interpreting.

import io.archivesunleashed._

import io.archivesunleashed.udfs._

import io.archivesunleashed.app._

webgraph: org.apache.spark.sql.DataFrame = [crawl_date: string, src: string ... 2 more fields]

graph: Array[org.apache.spark.sql.Row] = Array([20200411,blogs.scientificamerican.com,scientificamerican.com,88], [20200404,blogs.scientificamerican.com,scientificamerican.com,88], [20200314,blogs.scientificamerican.com,scientificamerican.com,86], [20200305,blogs.scientificamerican.com,scientificamerican.com,52], [20200329,blogs.scientificamerican.com,scientificamerican.com,44], [20200408,blogs.scientificamerican.com,scientificamerican.com,44], [20200409,blogs.scientificamerican.com,scientificamerican.com,44], [20200403,blogs.scientificamerican.com,scientificamerican.com,44], [20200407,blogs.scie...

scala>

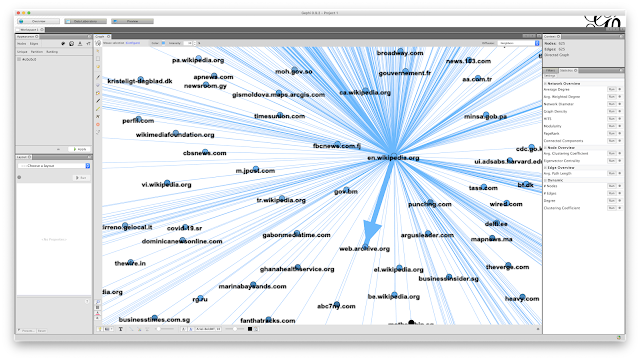

We can render the resulting file with Gephi. The thickness of each edge indicates how many links occur between two domains. Here we see that blogs.scientificamerican.com links mostly to scientificamerican.com, with fewer links out to social media and other sites.

The output of our blogs.scientificamerican.com Archives Unleashed query is shown rendered in Gephi.

What other link relationships can we explore? By changing the query to include all pages from en.wikipedia.org, we see that most of the links from wikipedia.org go to archive.org. This is likely a result of Wikipedia's URL rewriting effort.

These kinds of insights are not supported by Hypercane, and I do not have access to the WARCs in IIPC's COVID-19 collection. By leveraging the Memento Protocol and having an understanding of raw mementos, Hypercane now provides the bridge to do this and other analyses on any public Archive-It collection, list of TimeMap URI-Ts, list of original resource URI-Rs, or list of memento URI-Ms. The results may vary depending on how well a web archive supports the Memento Protocol or the discovery of raw mementos. Network issues and playback engine issues are also a factor, so this is a best-effort attempt to synthesize a collection for analysis.

As we stated in Part 1, Hypercane does not just accept Archive-It collections as input. Any list of TimeMaps, mementos, or even original resources can be fed into hc synthesize.

With this capability I can leverage Archives Unleashed Toolkit to explore collections without developing my own query tools. From such queries I expect to be able to develop new sampling algorithms to include in Hypercane.

Synthesizing boilerplate-free files for Gensim

Most NLP tutorial examples assume that the user has access to plain text files. As I evaluate tools and algorithms for web archive summarization, I often need boilerplate-free text files. Hypercane allows us to generate a directory containing these text files so we can then explore them with tools like Gensim. Because boilerpipe scored best during Nwala's boilerplate-removal analysis, Hypercane uses the ArticleExtractor class of the boilerpy3 library to remove boilerplate from HTML documents. Just like with WARC output, these boilerplate-free files are built from raw mementos rather than those containing rewritten links and banners.

# hc synthesize bpfree-files -i archiveit -a 13529 -o 13529-files

2020-04-30 15:07:38,553 [INFO] hypercane.actions.synthesize: Starting generation of boilerplate-free files from input

2020-04-30 15:07:38,554 [INFO] hypercane.identify: processing input for type archiveit

2020-04-30 15:07:38,554 [INFO] hypercane.identify: discovering mementos for input type archiveit

2020-04-30 15:08:59,212 [ERROR] hypercane.identify: Skipping TimeMap http://wayback.archive-it.org/13529/timemap/link/https://jobtube.cn/wv/?from=singlemessage&isappinstalled=0, encountered problem extracting URI-Ms from TimeMap: KeyError('mementos')

Traceback (most recent call last):

File "/Users/smj/.virtualenvs/hypercane/lib/python3.7/site-packages/hypercane/identify/__init__.py", line 143, in download_urits_and_extract_urims

urims = extract_urims_from_TimeMap(timemap_content)

File "/Users/smj/.virtualenvs/hypercane/lib/python3.7/site-packages/hypercane/identify/__init__.py", line 109, in extract_urims_from_TimeMap

for memento in timemap_json_text["mementos"]["list"]:

KeyError: 'mementos'

2020-04-30 15:13:10,489 [INFO] hypercane.identify: discovered 23599 URIs

2020-04-30 15:13:10,495 [INFO] hypercane.actions.synthesize: discovered 23376 URI-Ms from the input

2020-04-30 15:13:10,504 [INFO] hypercane.utils: returing boilerplate free content from cache for http://wayback.archive-it.org/13529/20200327232109/http://chranimnejslabsi.cz/

2020-04-30 15:13:10,506 [INFO] hypercane.actions.synthesize: writing out data for URI-M http://wayback.archive-it.org/13529/20200327232109/http://chranimnejslabsi.cz/

2020-04-30 15:13:10,530 [INFO] hypercane.utils: returing boilerplate free content from cache for http://wayback.archive-it.org/13529/20200327232250/https://chranimnejslabsi.cz/

2020-04-30 15:13:10,532 [INFO] hypercane.actions.synthesize: writing out data for URI-M http://wayback.archive-it.org/13529/20200327232250/https://chranimnejslabsi.cz/

2020-04-30 15:13:10,534 [INFO] hypercane.utils: returing boilerplate free content from cache for http://wayback.archive-it.org/13529/20200329074235/https://chranimnejslabsi.cz/

2020-04-30 15:13:10,535 [INFO] hypercane.actions.synthesize: writing out data for URI-M http://wayback.archive-it.org/13529/20200329074235/https://chranimnejslabsi.cz/

2020-04-30 15:13:10,537 [INFO] hypercane.utils: returing boilerplate free content from cache for http://wayback.archive-it.org/13529/20200330120210/http://www.chranimnejslabsi.cz/

2020-04-30 15:13:10,539 [INFO] hypercane.actions.synthesize: writing out data for URI-M http://wayback.archive-it.org/13529/20200330120210/http://www.chranimnejslabsi.cz/

... truncated for brevity ....

2020-04-30 16:20:49,198 [INFO] hypercane.utils: returing boilerplate free content from cache for http://wayback.archive-it.org/13529/20200323103313/https://zpravy.aktualne.cz/zahranici/online-koronavirus-ve-svete-lekari-proveruji-prvni-dva-pripa/r~68fa1c18410911eaac760cc47ab5f122/

2020-04-30 16:20:49,211 [INFO] hypercane.actions.synthesize: writing out data for URI-M http://wayback.archive-it.org/13529/20200323103313/https://zpravy.aktualne.cz/zahranici/online-koronavirus-ve-svete-lekari-proveruji-prvni-dva-pripa/r~68fa1c18410911eaac760cc47ab5f122/

2020-04-30 16:20:49,213 [INFO] hypercane.utils: returing boilerplate free content from cache for http://wayback.archive-it.org/13529/20200329024240/https://zpravy.aktualne.cz/zahranici/online-koronavirus-ve-svete-lekari-proveruji-prvni-dva-pripa/r~68fa1c18410911eaac760cc47ab5f122/

2020-04-30 16:20:49,307 [INFO] hypercane.actions.synthesize: writing out data for URI-M http://wayback.archive-it.org/13529/20200329024240/https://zpravy.aktualne.cz/zahranici/online-koronavirus-ve-svete-lekari-proveruji-prvni-dva-pripa/r~68fa1c18410911eaac760cc47ab5f122/

2020-04-30 16:20:49,325 [INFO] hypercane.actions.synthesize: Done generating directory of boilerplate-free files, output is at ../hypercane-testing-outputs/13529-files

This produces a directory containing one file per memento with its boilerplate removed as well as a file named metadata.tsv containing a mapping of these files to their original URI-Ms.

# ls 13529-files

... top truncated for brevity ...

7f59a7329c54fdeb200596a879cdc836.dat ffee8bb90699cdfdf4a8d2ff9e6ca93b.dat

7f5d984d2a9169d6e79a60174008580f.dat ffeea61bdb1dc412397855c831a7861b.dat

7f644b5846d760e8d5da5711e3f8bddd.dat fff6da687900dc0ddf6e56718bbed4ba.dat

7f688694ef334388be7454809aeb911e.dat fff949be304249f3c17f6c092b383127.dat

7f69b217266761ddd9efff1ef0b0e29c.dat fffa625016493f2befef2c20b0175756.dat

7f6d4f80a1f19f36e2af99068f162755.dat fffc1819552f573130a7e3b08f1a8f1a.dat

7f6f4060658e2b12605182f7c1b65dbf.dat fffc63a76e8c0dcdff9dc924e821ec2c.dat

7f6f9db8c909cee4debc4c346c6e37fc.dat fffe96822246c1ab0bb7f5dd7151fea9.dat

7f70d8f1a2493fe5c3d219a64b4e87f1.dat metadata.tsv

7f76e3c4a7afad443f1d8605b92cd079.dat
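The listing shows file names that look like 32-character hexadecimal digests. The sketch below writes and reads a directory with the same general shape, one .dat file per memento plus a metadata.tsv index, so that each text can be paired back with its URI-M. The layout is hypothetical: naming files by the MD5 digest of the URI-M and giving metadata.tsv two columns (file name, URI-M) are assumptions, not a description of Hypercane's actual format.

```python
import csv
import hashlib
import os
import tempfile


def write_corpus(documents, outdir):
    """Write one .dat file per memento plus a metadata.tsv index.

    HYPOTHETICAL layout: file name = MD5 hex digest of the URI-M + ".dat";
    metadata.tsv rows = (file name, URI-M).
    """
    os.makedirs(outdir, exist_ok=True)
    index_path = os.path.join(outdir, "metadata.tsv")
    with open(index_path, "w", newline="", encoding="utf-8") as index:
        writer = csv.writer(index, delimiter="\t")
        for urim, text in documents.items():
            filename = hashlib.md5(urim.encode("utf-8")).hexdigest() + ".dat"
            with open(os.path.join(outdir, filename), "w", encoding="utf-8") as f:
                f.write(text)
            writer.writerow([filename, urim])


def read_corpus(outdir):
    """Map each URI-M back to its text using the metadata.tsv index."""
    corpus = {}
    index_path = os.path.join(outdir, "metadata.tsv")
    with open(index_path, newline="", encoding="utf-8") as index:
        for filename, urim in csv.reader(index, delimiter="\t"):
            with open(os.path.join(outdir, filename), encoding="utf-8") as f:
                corpus[urim] = f.read()
    return corpus


if __name__ == "__main__":
    docs = {"http://wayback.archive-it.org/13529/20200327232109/http://chranimnejslabsi.cz/": "example text"}
    with tempfile.TemporaryDirectory() as tmp:
        write_corpus(docs, tmp)
        print(read_corpus(tmp) == docs)
```

Keeping the URI-M mapping in a separate index lets NLP code treat the directory as plain text files while still tracing any result back to its memento.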

# ipython

Python 3.7.7 (default, Mar 10 2020, 15:43:33)

Type 'copyright', 'credits' or 'license' for more information

IPython 7.11.1 -- An enhanced Interactive Python. Type '?' for help.

In [1]: import os
   ...: import string

In [2]: from langdetect import detect

In [3]: from langdetect.lang_detect_exception import LangDetectException

In [4]: from collections import defaultdict

...: from gensim import corpora

In [5]: from stop_words import get_stop_words

In [6]: documents = []

In [7]: for filename in os.listdir('13529-files'):
   ...:     if filename == 'metadata.tsv':
   ...:         continue  # skip metadata file
   ...:
   ...:     with open('13529-files/{}'.format(filename)) as f:
   ...:         data = f.read()
   ...:     try:
   ...:         lang = detect(data)
   ...:         if lang == 'en':
   ...:             documents.append( data )
   ...:     except LangDetectException:
   ...:         pass  # skip errors
   ...:

In [8]: stoplist = get_stop_words('english')

In [9]: stoplist.append('will')

In [10]: stoplist.append('can')

In [11]: stoplist.extend([ char for char in string.punctuation ])

In [12]: stoplist.extend([ char for char in string.digits ])

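In [13]: texts = [
    ...:     # strip punctuation first, then filter, so "the," is caught by the stoplist
    ...:     [word for word in (token.strip(string.punctuation) for token in document.lower().split())
    ...:      if word not in stoplist and len(word) > 1]
    ...:     for document in documents
    ...: ]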

In [14]: # remove words that appear only once
    ...: frequency = defaultdict(int)
    ...: for text in texts:
    ...:     for token in text:
    ...:         frequency[token] += 1
    ...:
    ...: texts = [
    ...:     [token for token in text if frequency[token] > 1]
    ...:     for text in texts
    ...: ]

In [15]: dictionary = corpora.Dictionary(texts)

...: corpus = [dictionary.doc2bow(text) for text in texts]

...:

In [16]: from gensim import models

In [17]: model = models.LdaModel(corpus, id2word=dictionary, num_topics=10)

In [18]: import matplotlib.pyplot as plt

In [19]: from wordcloud import WordCloud

In [20]: for t in range(model.num_topics):
    ...:     plt.figure()
    ...:     d = {}
    ...:     for k, v in model.show_topic(t, 200):
    ...:         d[k] = v
    ...:     plt.imshow(WordCloud().fit_words(d))
    ...:     plt.axis("off")
    ...:     plt.title("Topic #" + str(t))
    ...:     plt.savefig("Figure_" + str(t) + ".png")

Ten LDA topics from Archive-It collection 13529, as it was in April 2020, modeled as word clouds.

Summary and Discussion

Hypercane's principal focus is providing a small sample of documents from a collection. As we add new functionality, we must rely upon third-party tools that accept other formats. The synthesize action exists to help us generate output in these formats for these tools. For our storytelling efforts, Hypercane produces output to be consumed by Raintale. For our exploration efforts, it supports formats such as WARC and text files. We may add additional output types in the future as needed.

In the case of text files and WARCs, the synthesized output is a best effort to extract content from the mementos in the collection. Currently the output only represents HTML pages. Only mementos that could be discovered and downloaded from the web archive are available. If the web archive's playback engine is malfunctioning then the content of these WARCs may be incomplete.

These best-effort capabilities of synthesize make Hypercane complementary to other tools in the web archivist's toolkit. With these different outputs, the archivist can explore collections in new ways. In the next post, we will show how archivists can employ Hypercane's other actions to build their own algorithms for producing a representative sample. Once they have that sample, they can tell a story with whatever tool they choose.

-- Shawn M. Jones

Acknowledgements:

I would like to thank Ian Milligan and Nick Ruest for their feedback on this blog post.
