2020-06-08: Who is that person in the picture? Or, how Python and Haar can add value to an image.

(Sung to the tune of "How Much is that Doggie in the Window")

Who is that person in the picture?

The one with the light brown hair.

Who is that person in the picture?

I do hope that someone would share.

Introduction

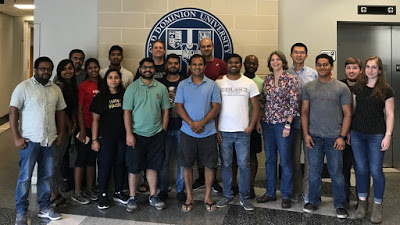

Oftentimes when a group gets together, for whatever reason, there will be a group picture at the end to commemorate the good times had by all. If this "sea of faces" gets published, in hard or soft copy, there may be a one- or two-line caption giving the name of the group and perhaps where and when the image was created. Six months or a year later, the image has only marginal value to the people who were there, and almost no value to those who were not, because it is just a sea of faces.

We are interested in a low-cost method (one that takes very little human time) of adding value to the soft copy of the image, so that the image will have greater value later. We have developed a Python script that uses a Haar facial detection cascade to create a clickable HTML image map. The image map can be incorporated into other HTML pages to support a dynamic and valuable web experience.

(Links to source code, worked examples, a video showing program execution, and full documentation are at the end of this write-up.)

Purpose

Picking out faces in a crowded image to create an image map can be time-consuming and error-prone. An automated or semi-automated tool that assists a human in this type of tedious task would be beneficial. The utility and usability of the online sites currently available for this task vary considerably.

Our purpose is to automate the heavy lifting as much as possible, to keep the end product as agnostic as possible, and to minimize the time a human spends "diddling" the image. Towards those ends, we:

1. Decided to use a Haar cascade facial detection approach because it is fast and well known,

2. Decided to use Python 3 because it is available for both Windows and Unix distributions,

3. Decided to use native image viewers to support the facial detection process, and

4. Tried to make the application as simple as possible.

Finding faces

Detecting faces depends on several factors and actions working together. We will start with a quick overview of the program internals, followed by a short example.

Our Python 3 script uses cv2.CascadeClassifier as the heavy-lifting engine for detecting faces with a Haar frontal face cascade. The script was written and tested on an Ubuntu Linux distribution, and should run without changes in a Windows environment.

Image processing and facial detection have two distinct phases:

1. Detecting "face-like" pixel patterns in the image.

2. Accepting or rejecting the detections.

Detecting faces

The Haar detector works on pixels and their relationship to the pixels around them. If adjacent pixels don't have the relationship that the detector expects, the pixels are rejected. When a human looks at an image, the human may see patterns there that Haar does not, either because the pixel pattern is the wrong size or because the differences between the pixels are not distinct enough.
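As a rough illustration of what a "relationship between adjacent pixels" can look like, here is a minimal sketch of a single two-rectangle Haar-like feature computed from a summed-area table. This is not the code inside OpenCV, and a trained cascade combines many such features with learned thresholds. The function names and the toy pixel values are ours and purely hypothetical.

```python
def integral_image(pixels):
    """Return a summed-area table with one extra row and column of zeros."""
    rows, cols = len(pixels), len(pixels[0])
    table = [[0] * (cols + 1) for _ in range(rows + 1)]
    for y in range(rows):
        row_sum = 0
        for x in range(cols):
            row_sum += pixels[y][x]
            table[y + 1][x + 1] = table[y][x + 1] + row_sum
    return table


def region_sum(table, x, y, w, h):
    """Sum of the w-by-h block whose top-left pixel is (x, y)."""
    return table[y + h][x + w] - table[y][x + w] - table[y + h][x] + table[y][x]


def two_rect_feature(table, x, y, w, h):
    """Lower half minus upper half of a w-by-h window (h should be even)."""
    half = h // 2
    upper = region_sum(table, x, y, w, half)
    lower = region_sum(table, x, y + half, w, half)
    return lower - upper


# Toy data: a dark band above a bright band gives a strong response,
# while a uniformly gray patch gives none.
banded = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
flat = [[100] * 4 for _ in range(4)]

strong = two_rect_feature(integral_image(banded), 0, 0, 4, 4)
weak = two_rect_feature(integral_image(flat), 0, 0, 4, 4)
print(strong, weak)
```

A window whose feature values fall outside what the cascade expects is rejected, which is why low contrast or a pattern of the wrong size can hide a face that a human sees easily.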

For our purposes, we focus on pixel patterns being the wrong size. So we change the size of the image, run the detector again, and evaluate the results. On each pass, the software enlarges a copy of the original image by 10% (within the bounds of integer math).

| Iteration | Image processed | Faces detected |
| --- | --- | --- |
| #01 | Original image | 0 |
| #02 | Size increased by 10% | 2 |
| #03 | Size increased by 10% | 6 |
| #04 | Size increased by 10% | 14 |
| #05 | Size increased by 10% | 17 |

(From iteration #02 on, each processed image is larger than the previous one, even though they appear the same size on the page.)
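The enlarge-and-retry idea can be sketched as follows. This is a minimal sketch under stated assumptions, not the script itself: we assume each pass enlarges the previous copy by 10% using integer arithmetic, that a hypothetical `detect` callable returns `(x, y, w, h)` boxes in the coordinates of the copy it was given, that the loop stops once `enough` boxes are found, and that boxes are mapped back to the original image so they can be outlined there. All names are ours.

```python
def grow(size, percent=10):
    """Enlarge a (width, height) pair by `percent`, truncating to integers."""
    width, height = size
    return (width * (100 + percent) // 100, height * (100 + percent) // 100)


def to_original(box, scaled_size, original_size):
    """Map an (x, y, w, h) box found on the scaled copy back to the original."""
    sx = original_size[0] / scaled_size[0]
    sy = original_size[1] / scaled_size[1]
    x, y, w, h = box
    return (round(x * sx), round(y * sy), round(w * sx), round(h * sy))


def detect_with_rescaling(original_size, detect, enough, max_passes=10):
    """Run `detect(size)` on ever larger copies until `enough` boxes are found.

    Returns the boxes in original-image coordinates and the number of passes.
    """
    if max_passes < 1:
        raise ValueError("max_passes must be at least 1")
    size = original_size
    for passes in range(1, max_passes + 1):
        boxes = list(detect(size))
        if len(boxes) >= enough or passes == max_passes:
            break
        size = grow(size)  # only enlarge when another pass will follow
    mapped = [to_original(box, size, original_size) for box in boxes]
    return mapped, passes


def fake_detect(size):
    # Stand-in detector: "finds" one face once the copy is at least 770 px wide.
    return [(77, 154, 110, 110)] if size[0] >= 770 else []


boxes, passes = detect_with_rescaling((640, 480), fake_detect, enough=1)
print(passes, boxes)
```

With OpenCV, the `detect` callable could resize the image with `cv2.resize` and call `detectMultiScale` on a `cv2.CascadeClassifier`; the stand-in detector here exists only so the loop can be run without an image.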

Accepting faces

After an acceptable number of faces has been detected, the user is queried about each detection as to whether or not the detected area represents a face.

The mechanics are:

1. The original image is presented with the current candidate area outlined.

2. The user is asked whether or not to accept the area. A "y" means yes. An "n" means no.

3. If the user elects to accept the area:

(a) Information is asked about the accepted area.

(b) Information is written to an HTML file.

(c) The area is outlined on a temporary copy of the image.

4. If the user elects to not accept the area, nothing happens.

5. If the user elects to end the program ("e") then the HTML file is finalized and the program ends.

6. Otherwise, the next area is queried, and the process continues.
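The steps above can be sketched as a small review loop. This is a minimal sketch, assuming the answers "y", "n", and "e" described in the list; the `ask` and `describe` callbacks are hypothetical stand-ins for the console prompts, so the loop can be exercised without an image viewer or keyboard input. Writing the HTML file and outlining the area are left to the caller.

```python
def review_detections(boxes, ask, describe):
    """Walk the candidate boxes and return the ones the user accepts.

    ask(box) returns "y", "n", or "e"; describe(box) returns the information
    to record for an accepted area. Both are hypothetical callbacks.
    """
    accepted = []
    for box in boxes:
        answer = ask(box).strip().lower()
        if answer == "e":
            break  # end early; the caller finalizes the HTML file
        if answer == "y":
            accepted.append((box, describe(box)))
        # any other answer is treated as a rejection: nothing happens
    return accepted


# Scripted answers stand in for a user at the keyboard.
answers = iter(["y", "n", "e", "y"])
candidates = [(10, 10, 40, 40), (60, 10, 40, 40), (110, 10, 40, 40), (160, 10, 40, 40)]

kept = review_detections(
    candidates,
    ask=lambda box: next(answers),
    describe=lambda box: f"Person at x={box[0]}",
)
print(kept)
```

In interactive use, `ask` could simply wrap Python's built-in `input()` with a prompt that names the outlined area.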

A few of these operations are displayed below. The process is repeated in the same manner for all detection areas.

Figure: Query of detection area #01.

Figure: Acceptance of detection area #01.

Numerous queries and acceptances later.

Figure: Query of detection area #06.

Figure: Acceptance of detection area #06.

The process repeats until all detected areas are accepted or rejected.

Future work

The purpose of this exploration was to see how to do the "heavy lifting" of detecting faces in a "sea of faces" and then to demonstrate doing something with those detections.

The base program could be expanded in these areas:

1. Replace the "onclick" URL in the area tag with a direct link.

2. Integrate the basic Python functionality into a larger GUI based application.

3. Remove the need for outside image viewers to see the intermediate images.
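To make the first item concrete, here is a minimal sketch of an image map whose area tags carry a direct `href` link instead of an "onclick" handler. It is an illustration under assumptions, not the output format of the current script: the file name, map name, person name, and URL are all hypothetical, and each box is assumed to be `(x, y, w, h)` in original-image pixels.

```python
from html import escape


def area_tag(box, title, href):
    """Build one rectangular <area> tag from an (x, y, w, h) box."""
    x, y, w, h = box
    coords = f"{x},{y},{x + w},{y + h}"  # HTML rect coords are x1,y1,x2,y2
    safe_title = escape(title, quote=True)
    safe_href = escape(href, quote=True)
    return (f'<area shape="rect" coords="{coords}" href="{safe_href}" '
            f'alt="{safe_title}" title="{safe_title}">')


def image_map(image_src, map_name, areas):
    """Return an <img> plus <map> fragment for (box, title, href) triples."""
    name = escape(map_name, quote=True)
    lines = [
        f'<img src="{escape(image_src, quote=True)}" usemap="#{name}" alt="Group picture">',
        f'<map name="{name}">',
    ]
    lines += [area_tag(box, title, href) for box, title, href in areas]
    lines.append("</map>")
    return "\n".join(lines)


fragment = image_map(
    "group.png",
    "faces",
    [((64, 127, 91, 91), 'Jane "JD" Doe', "https://example.org/jane")],
)
print(fragment)
```

Escaping the title and link keeps a name that contains quotes from breaking the attribute, which matters when the information is typed in by hand during the acceptance step.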

Conclusion

We started our exploration looking to reduce the number of man-hours it takes to turn a "sea of faces" into something that the viewer can drill down into. We used a Haar-based cascade detector in a Python script to detect "faces." Depending on the size of the "faces," the detector would return "true" or "false" faces. The script allowed us to evaluate each of these detections and select the ones that we wanted to accept as "real" faces. Finally, the script created a web page where we could click on a face and find out something about it. The web page source can, and should, be modified and then included in a final page so that users can find a single face in a sea of faces and be told something about that face.

External links

The Python code and the frontal face XML files are needed to replicate the actions described in this post.

We hope you have as much fun with this software as we did putting it together.

-- Chuck Cartledge ( ccartled@cs.odu.edu )

8 June 2020
