2018-07-15: How well are the National Guideline Clearinghouse and the National Quality Measures Clearinghouse Archived?

On July 13, I saw this on Twitter:

There are two US government websites in danger, the National Guideline Clearinghouse (On Monday, doctors, nurses and researchers will lose access to a trove of medical data, as the Trump Administration shuts down the National Guidelines Clearinghouse. See our piece today, by our Senior Investigator @j0ncampbell, in @TheDailyBeast: https://t.co/Qz1tkRIQIM— Sunlight Web Integrity Project (@SunWebIntegrity) July 12, 2018

https://www.guideline.gov) and the National Quality Measures Clearinghouse (https://qualitymeasures.ahrq.gov). Both store medical guidelines. Both will "not be available after July 16, 2018". According to the linked Daily Beast article above:

Medical guidelines are best thought of as cheatsheets for the medical field, compiling the latest research in an easy-to-use format. When doctors want to know when they should start insulin treatments, or how best to manage an HIV patient in unstable housing — even something as mundane as when to start an older patient on a vitamin D supplement — they look for the relevant guidelines. The documents are published by a myriad of professional and other organizations, and NGC has long been considered among the most comprehensive and reliable repositories in the world.

The Sunlight Foundation Web Integrity Project wrote a report about the archivabilty of this service. They note that "interactive features do not function, making archived content much more difficult to access and, in many cases completely unavailable." Seeing as web archives typically crawl websites from the client side and have no access to the server components, I expect that the search functionality of the web sites should not work once archived.

Update on 2018/07/25 at 22:56 GMT: The Government Info Librarian Blog covers the shutdown of these sites as well.

Update on 2018/07/30 at 21:17 GMT: Jennifer Hinkel covers where else one can find clinical guidelines now that these sites are gone.

The robots.txt for www.guideline.gov disallows everyone:

The robots.txt for qualitymeasures.ahrq.gov disallows everyone:

In December of 2016, the Internet Archive stopped honoring robots.txt for .gov and .mil websites, hoping to "keep this valuable information available to users in the future". Seeing at these two sites will be shut down on July 16, 2018, how well are they archived?

Experiment Setup

The method I used to evaluate how much of each site was archived consisted of the following general steps:

- Acquire a sample of original resource URIs from

www.guideline.govandqualitymeasures.ahrq.gov - Use a Memento Aggregator to determine if each original resource has at least one memento

I created a GitHub repository to save my work. Due to the time crunch, I did not organize it nicely and it will be updated in the coming days with more content used in this article, so check back to it often if interested.

Update on 2018/07/16 at 20:37 GMT: The GitHub repository is now as stable as it is going to be. As it was written over the course of 3 days, the code is very, very rough. I have no intentions of improving it, but the data and code is provided for anyone who is interested. Feel free to contact me on Twitter with any questions.

After acquiring sample original resource URIs to test I installed MemGator, a Memento Aggregator developed by the WS-DL Research Group. I wrote a Python script which requested an aggregated TimeMap from MemGator for each original resource URI and recorded the number of mementos per URI.

So, what categories of documents did I retrieve before feeding them into MemGator?

Main Products - Summaries

I reviewed the menus across the top of each site's home page. I discovered that the main product of

www.guideline.gov appeared to be the guideline summaries and the main product of qualitymeasures.ahrq.gov appeared to be measure summaries. I focused on these documents because, if captured as mementos, an enterprising archivist could build their own search engine around them.As shown in the screenshot below, these summaries were accessible via paginated search result pages. Fortunately, there is an "All Summaries" option which will list all summaries as a series of search results.

The

qualitymeasures.ahrq.gov site also has its own "All Summaries" page, shown in the screenshot below, so these URIs can be scraped using a script aware of the paging as well.As Corren wrote last year, pagination can result in a missed captures. Knowing this, I wondered if the pagination would have an impact on if the guideline summaries were archived.

I wrote some simple (and very rough) code in Python using the requests library and BeautifulSoup to scrape all URIs from each search result page. The same script was used to scrape both sites. For both sites I selected the guideline summary URIs, identified because they contained the string "/summaries/summary/", and removed duplicates. This gave me 1415 original URIs for

www.guideline.gov and 2533 original URIs for qualitymeasures.ahrq.gov.Expert Commentaries

Both sites also contained expert commentaries about these summaries. I decided that this also looked important, even though these commentaries did not appear to be indexed by the search engine.

|

A screenshot of the Expert Commentaries page on www.guideline.gov |

|

A screenshot of the Expert Commentaries page on qualitymeasures.ahrq.gov |

www.guideline.gov and 52 URIs for qualitymeasures.ahrq.gov.Guideline Syntheses

The

www.guideline.gov site has a series of documents labeled guideline synthesis documenting "areas of agreement and difference, the major recommendations, the corresponding strength of evidence and recommendation rating schemes, and a comparison of guideline methodologies". These documents also seemed to be important, so I chose to include them as well.

|

The Guideline Synthesis page at www.guideline.gov is another set of documents provided by the web site. |

I wrote a script to scrape this page for all guideline synthesis URIs. This led me to 18 URIs for

www.guideline.gov. The qualitymeasures.ahrq.gov site did not contain this type of document.Summaries in other formats

In addition to the HTML formatted guideline summaries, there were guideline summaries available in PDF, XML, and DOC format on

www.guideline.gov. I wrote another script to iterate through all of the summary pages captured in the previous section and save off the PDF, XML, and DOC URIs. The qualitymeasures.ahrq.gov website only has HTML formatted measure summaries, so this document category does not apply to that site.

|

This screenshot demonstrates the multiple formats available for a guideline summary on www.guideline.gov. |

My script to scrape these pages gave me 4185 URIs for

www.guideline.gov.Other Pages

Finally, I was curious about what may have been missed elsewhere. I decided to try to gather URIs as a crawler would who is given the seed of the top level page. With this exercise, I was hoping to gather a number of top level pages to see how their archive status differed from the guideline summaries, expert commentaries, guideline syntheses, and the measure summaries.

I wrote two simple spiders (crawlers) using the Python crawling framework scrapy. I pointed each spider at the homepage of each website, instructed it not to crawl outside of the domain of each site, and told it to print out any URI listed on a page it discovered while crawling. Unfortunately, I ran it on a machine with insufficient memory. The operating system killed scrapy in both cases because it was consuming too much memory. This means that the crawl for

www.guideline.gov ran for 4 hours while the crawl for qualitymeasures.ahrq.gov ran for 7 hours. This inconsistency in crawl times was disappointing, but I kept the URIs from these crawls because they provide an interesting contrast in the results section.

Once I had a list of URIs linked from pages encountered during the crawl, I then removed all URIs that were not in the domains of

www.guideline.gov or qualitymeasures.ahrq.gov, respectively.

Hundreds of thousands of URIs returned were related to search facets. The crawl of

www.guideline.gov returned 894,881 such URIs while the crawl of qualitymeasures.ahrq.gov returned 1,474,516. Because these search facet URIs were related to the summaries from the prior sections, I removed these search URIs in the interest of time and only focused on the other pages crawled because these other pages contained actual content. I removed any URIs containing fragments (i.e. hashes like #introduction). I also filtered the URIs for summaries, guideline syntheses, and expert commentaries so that there would be no overlap in results.

I then fed the URIs through MemGator to see if the pages were captured.

Results and Discussion

The table below shows the results of testing if a page was archived for

www.guideline.gov. Of those URIs recorded for this experiment, 98.8% of them were indeed archived, which is good news.

www.guideline.govPage Category |

Archived | Not Archived | Total |

|---|---|---|---|

| Guidelines Summaries (HTML) | 1401 | 14 | 1415 |

| Expert Commentaries | 45 | 0 | 45 |

| Guideline Syntheses | 18 | 0 | 18 |

| Guideline Summaries (Other Formats) | 4185 | 57 | 4242 |

| Other Pages | 150 | 2 | 152 |

| Total | 5799 (98.8%) |

73 (1.2%) |

5872 |

Most importantly, of the 1415 guideline summaries from

www.guideline.gov, 1401/1415 (99.0%) are archived. Only 14/1415 (1.0%) are not archived. Also, all 45 expert commentaries and all 18 guideline syntheses are archived. This means that almost all of the important site content is preserved and an enterprising archivist can build a search engine around them in the future.

The table below shows the results of testing if a page was archived for

qualitymeasures.ahrq.gov. Of the URIs recorded for this experiment, 97.5% of them were archived.

qualitymeasures.ahrq.govPage Category |

Archived | Not Archived | Total |

|---|---|---|---|

| Measures Summaries | 2509 | 24 | 2533 |

| Expert Commentaries | 52 | 0 | 52 |

| Other Pages | 90 | 44 | 134 |

| Total | 2651 (97.5%) |

68 (2.5%) |

2719 |

Of the 2533 measure summaries from

qualitymeasures.ahrq.gov, 2509/2533 (99%) are archived. Only 24/2533 (0.9%) were not archived. Also, all 52 expert commentaries are archived. Again, this means that the majority of the important documents exist in a web archive and can be indexed by a potential search engine in the future. The picture is not so good for the other pages category, where only 90/134 (67.2%) of the pages exist in a web archive.The high overall numbers are remarkable and likely a result of the Internet Archive's efforts to remove the robots.txt restrictions at the end of 2016. The next sections answer additional questions.

What is the distribution of mementos per category per site?

Below several histograms show the distribution of memento counts across the the different categories of pages for

www.guideline.gov. Note that this only applies to those pages with mementos. |

Histogram of the number of mementos per URI for guideline summaries for www.guideline.gov. Minimum: 1, Maximum: 24, Mode: 8. Note: only pages with mementos were evaluated. |

|

Histogram of the number of mementos per URI for expert commentaries for www.guideline.gov. Minimum: 9, Maximum: 14, Mode: 11. Note: only pages with mementos were evaluated. |

|

Histogram of the number of mementos per URI for guideline syntheses for www.guideline.gov.Minimum: 9, Maximum: 18, Mode: 14. Note: only pages with mementos were evaluated. |

|

Histogram of the number of mementos per URI for non-HTML guideline summaries for www.guideline.gov. Minimum: 1, Maximum: 13, Mode: 9. Note: only pages with mementos were evaluated. |

|

Histogram of the number of mementos per URI for other pages for www.guideline.gov.Minimum: 1, Maximum: 2072, Mode: 1. Note: only pages with mementos were evaluated. |

Below several histograms show the distribution of memento counts across the the different categories of pages for

qualitymeasures.ahrq.gov. |

Histogram of the number of mementos per URI for measure summaries for qualitymeasures.ahrq.gov.Minimum: 1, Maximum: 15, Mode: 4. |

|

Histogram of the number of mementos per URI for expert commentaries for qualitymeasures.ahrq.gov.Minimum: 6, Maximum: 7, Mode: 7. |

|

Histogram of the number of mementos per URI for other pages for qualitymeasures.ahrq.gov.Minimum: 2, Maximum 131, Mode: 2. |

The numbers are much lower for

qualitymeasures.ahrq.gov, but they exhibit the same pattern.How does the crawling pattern for mementos change over time per category per site?

So, how does the crawling of

www.guideline.gov change over time? The bar charts below show the number of mementos added to archives per month based on their memento-datetime. |

Memento count per month for guideline summaries for www.guideline.gov. We see a big push in more recent months. |

|

Memento count per month for expert commentaries for www.guideline.gov. There is much the same pattern as for the prior category. |

|

The number of mementos crawled per month for the guideline syntheses documents of www.guideline.gov. There has been a lot of activity the past few months. |

|

Memento count per month for the non-HTML versions of guideline summaries for www.guideline.gov. Again, we see a big push in more recent months. |

|

Memento count per month for other pages at www.guideline.gov. Here we see years of crawling with big spikes after the US election. This may be related to the Internet Archive's new robots.txt policy. |

In the last graph, we see years of crawling the top level pages. This is interesting considering the contents of the robots.txt file. Did it change over time? Was it more permissive at some point? Fortunately, we have web archives we can use to check.

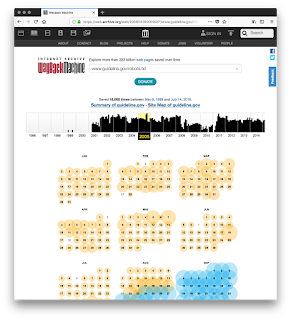

|

Here is a screenshot of the Internet Archive's capture calendar for www.guideline.gov/robots.txt from 2005. Orange indicates that the robots.txt file did not exist. Blue indicates that it did. |

www.guideline.gov until 2005. It was first observed at this site on August 23, 2005 at 22:54:19 GMT. Its contents were as follows:According to the robots.txt specification website, this indicates "To allow all robots complete access". This means that at one time, the site was far more permissive about crawling than it is now. I randomly chose a memento for the robots.txt each year after 2005 and found that it did not change. In August of 2008, the robots.txt disappeared again. In 2009, the successful robots.txt captures are actually of a soft-404 page indicating that it does not exist. Before September 11, 2010 at 18:14:50 GMT, the robots.txt became more complex, as shown below:

As we see, it still isn't disallowing all content like I mentioned at the beginning of the article. This configuration persisted until August 26, 2016 when the robots.txt was still present, but a completely blank file. The robots.txt was changed to its current state on April 27, 2017 before 20:09:28 GMT. The US Senate approved the nomination of Tom Price to the office of Secretary of Health and Human Services on February 1, 2017. This means that the site's robots.txt allowed crawling until after Tom Price took office. This is probably why so many top level pages had been captured by web archives since the site's creation.

What about the

qualitymeasures.ahrq.gov site? The bar charts below show the number of mementos per month for each of its categories.

|

Memento count per month for measure summaries at qualitymeasures.ahrq.gov. There is some activity in 2016, but a lot of very recent crawling of the content. |

|

Memento count per month for measure summaries at qualitymeasures.ahrq.gov. We see the same large push in recent history, with a lot of crawling. |

The crawling of

qualitymeasures.ahrq.gov follows much the same pattern, though not with the exact same spikes prior to this last month. From these graphs we see that there has been a concerted effort to archive both of these sites since June. This site created its first robots.txt on August 24, 2005 before 00:04:23 GMT. And the robots.txt was completely permissive, as with www.guideline.gov. |

The emergence of a robots.txt for qualitymeasures.ahrq.gov on August 24, 2005, as shown on the Internet Archive's calendar page for the URI qualitymeasures.ahrq.gov/robots.txt. |

The robots.txt went through much the same history for this site as for

www.guideline.gov, implying a similar policy or even the same webmaster for both sites. It finally changed to its current disallow state on April 27, 2017 before 22:10:11 GMT. Again, this is after Tom Price took office. This again explains why so many of the top level pages of the site were archived throughout the history of qualitymeasures.ahrq.gov.In which archives are these pages preserved?

I chose to use an aggregator because I wanted to search in multiple web archives for these pages. How well do these mementos spread throughout the archives? The charts below show the number of mementos per archive for each category of pages at

www.guideline.gov. Only archives containing mementos for a given category are displayed in each chart. |

This chart of the non-HTML versions of the guideline summaries for www.guideline.gov shows 14,846 mementos are preserved in the Internet Archive, but more, 19,044 are preserved in Archive-It. |

|

This chart of the expert commentaries for www.guideline.gov shows that 324 mementos are held by the Internet Archive while 177 are held by Archive-It. |

|

| This chart of the guideline syntheses for www.guideline.gov shows that 189 mementos are held by the Internet Archive while 62 are held by Archive-It. |

While the Internet Archive and Archive-It have most of the mementos, some mementos of the top-level pages of the site are held in other archives. As this is a US government web site, I was surprised that the Library of Congress was not featured more. Archive-It also has more non-HTML guideline summaries than the Internet Archive, indicating a particular effort by some organization to preserve these documents in other formats. Unfortunately, the Archive-It mementos I discovered with MemGator belonged to the collection

/all/ meaning that I have no indication as to which Archive-It collection or organization was preserving the pages.

Update on 2018/07/16 at 18:10 GMT: To find the specific Archive-It collection and collecting organization, Michele Weigle has suggested that one might be able to search the Archive-It collections for these URIs using Archive-It's explore all archives search interface. One would need to use the "Search Page Text" tab. I did try the string www.guideline.gov and discovered 5,906 search results, so this hostname is in the content of some of these pages. I tried using a URI reported to have an Archive-It memento, but did not receive any search results. If you are successful, please say something in the comments.

The bar charts below show the distribution of mementos across web archives for the

qualitymeasures.ahrq.gov web site.

|

This chart of the measure summaries for qualitymeasures.ahrq.gov shows 9,494 mementos at the Internet Archive and only 216 at Archive-It. |

|

This chart of the measure summaries for qualitymeasures.ahrq.gov shows all 360 mementos of expert commentaries are held by the Internet Archive. |

The results for

qualitymeasures.ahrq.gov show that most of the mementos for that site are archived at the Internet Archive, with a few in other archives. This is in contrast to the results for www.guideline.gov, where the numbers between the Internet Archive and Archive-It were close in many cases.Attempts at Archiving the Missing Pages

On July 14, 2018, I attempted to use our own ArchiveNow to preserve the ~1% of summary URIs from each site that had not been archived. Unfortunately, the live resources started responding very slowly. The sample of summary URIs that had not been archived produced 500 status codes, as can be seen in the output from the curl commands below, each which took close to a minute to execute:

I ran curl on all live URIs listed as not captured and they return a HTTP 500 status as of July 14, 2018 at approximately 16:50:00 GMT. Because I had scraped these URIs from the "All Summaries" page, it is possible that they returned 500 statuses at the time of crawl and this is why web archives do not currently have them. This means, that, even on the live web, they were not available. The live versions of the other summary pages with mementos returned a 200 status (after about a minute delay).

It is also possible that the service at these web sites is degrading in their last hours. As of approximately 07:00 GMT on July 15, 2018, the

qualitymeasures.ahrq.gov site was no longer available, displaying error messages for pages, as shown in the screenshot below. |

As of 07:00 GMT on July 15, 2018, the qualitymeasures.ahrq.gov website started displaying error messages instead of content. |

Update on 2018/07/16 at 19:00 GMT: The websiteThis was quite disheartening, because my plan was to archive the pages I had detected as missing after I did my initial study. I thought I had until July 16 to save the web pages!qualitymeasures.ahrq.govis available again, but the measure summaries that were missing from the archives still return HTTP 500 status codes. The missing guideline summaries forwww.guideline.govalso still return HTTP 500 status codes.

Conclusion

Almost all web archiving is done externally, with no knowledge of the software running on the server side. This reduces mementos to a series of observations of pages rather than a complete reproduction of all of the functionality that existed at a web site. In the case of two US government websites that will be shut down on July 16, 2018,

www.guideline.gov and qualitymeasures.ahrq.gov have server-side functionality, but their most valuable assets are a series of summary documents that can be captured without having to reproduce the functionality of the server side. In this article, I've tried to determine how much of these web sites have been captured prior to their termination.When focusing on the main products of each site, the guideline summaries and the measure summaries, we see that these products are actually pretty well archived, at 99% of guideline summaries for

www.guideline.gov and 99% of measure summaries for qualitymeasures.ahrq.gov. We also observed that 100% of all expert commentaries were archived in both cases. Other aspects of the site, such as trying to reproduce all facets of the search engine were not tested. I did, however, attempt to crawl the sites to gain a list of pages outside of these categories and discovered that, at least among the pages captured during a limited crawl, other pages at www.guideline.gov are archived at a percentage of 99%, higher than those for qualitymeasures.ahrq.gov, which only stand at 67.2%.

Many of these main products have more than one memento and as many as 25 in some cases. There are more mementos for

www.guideline.gov than for qualitymeasures.ahrq.gov, but the mode for the number of mementos of the main products range between 4 and 14 mementos. This means that the main products have good coverage. The top-level content at these sites, however, has a mode of 1 or 2 mementos, indicating poor coverage of the changes over time for some top-level pages.

Over the life of these sites, most of the mementos stored in web archives are for the top-level pages, because crawling was permitted by their robots.txt until April 27, 2017, a few months after Tom Price became the Secretary of Health and Human Services. Fortunately, there has been a large push to archive the main products of the site since September of 2016, resulting in many mementos created within the last month.

Most of the mementos for these sites are stored in the Internet Archive. Archive-It has more mementos of the non-HTML versions of guideline summaries for

www.guideline.gov, but its memento count is eclipsed by the Internet Archive in all other cases. After the Internet Archive and Archive-It, there is a long tail of archives for top-level pages, but the number of mementos for each of these archives is less than 100. With the exception of 10 guideline summaries for www.guideline.gov stored in Archive.today, none of the main products of these sites are stored outside of the Internet Archive or Archive-It.

My attempts to archive the pages after running this experiment failed, in large part due to the degradation in service at these web sites. Even though I tried preserving the pages prior to the cutoff date of July 16, 2018, they were no longer reliably available.

Because one needs to know the original resource URI in order to find mementos in a web archive, I have published the URIs I discovered to Figshare. I do this in hopes that someone might build a resource for providing easy access to the content of these sites, especially for medical personnel. If you want to access them, use these links.

Feel free to contact me if you run into problems with these files.

This case demonstrates the importance of organizations like the Sunlight foundation for identifying at risk resources. Also important are the web archives for allowing us to preserve these resources. This case also demonstrates how we can come together and ensure that these resources are preserved. We do need to be concerned that so much of this content is preserved in one place, rather than spread across multiple archives. If a page is of value to you, you have an obligation to archive it and archive it in multiple archives. What web pages have you archived today, so that you, and others, can access their content long after the live site has gone away?

Update on 2018/07/25 at 22:36 GMT: Thank you to James A. Jacobs of University of California San Diego and James R. Jacobs of Stanford University, writing for Free Government Information. They covered this blog post and provided additional analysis.

Update on 2018/09/13 at 11:47 GMT Archive-It has a collection named AHRQ Guidelines, collected by the National Library of Medicine. The collection was created in July of 2018. It is unknown how many of these guidelines exist in this collection because it is private.

--Shawn M. Jones

They've already swapped the HTTP server, now giving a different kind of 500:

ReplyDeletelate Sunday:

$ curl -I "https://www.guideline.gov/summaries/summary/51259/acr-appropriateness-criteria--chronic-ankle-pain"

HTTP/1.1 500

Content-Length: 0

Server: Microsoft-HTTPAPI/2.0

Set-Cookie: ARRAffinity=da7985c62a729352f6a2745ce1af0df3e542bf945932bc766fb35d7c851d7626;Path=/;HttpOnly;Domain=www.guideline.gov

Date: Mon, 16 Jul 2018 01:31:33 GMT

Strict-Transport-Security: max-age=31536000; includeSubDomains;

early Monday:

$ curl -I "https://www.guideline.gov/summaries/summary/51259/acr-appropriateness-criteria--chronic-ankle-pain"

HTTP/1.1 500 Internal Server Error

Cache-Control: private

Content-Length: 276

Content-Type: text/html; charset=utf-8

Server: Microsoft-IIS/10.0

Set-Cookie: ASP.NET_SessionId=zpa1czhpxeb1btvhmaqhrbgd; path=/; HttpOnly

Set-Cookie: ASP.NET_SessionId=zpa1czhpxeb1btvhmaqhrbgd; path=/; HttpOnly

Set-Cookie: NGC=RecentlyViewedContent=51259,51259; expires=Wed, 15-Aug-2018 13:20:14 GMT; path=/; HttpOnly

X-AspNetMvc-Version: 4.0

X-AspNet-Version: 4.0.30319

Request-Context: appId=cid-v1:deb297e9-23b8-4f6e-b376-44111ebc4951

X-Powered-By: ASP.NET

X-Frame-Options: SAMEORIGIN

Content-Security-Policy: frame-ancestors 'none';

Set-Cookie: ARRAffinity=538dcd6ad70f2a936442c3d633e1036eec6cd3cfb1870fe12b5fdc202dd29657;Path=/;HttpOnly;Domain=www.guideline.gov

Date: Mon, 16 Jul 2018 13:21:02 GMT

Strict-Transport-Security: max-age=31536000; includeSubDomains;

I used the search engine of https://www.guideline.gov and found this URI there. Visiting it in Chrome, Safari, or Firefox resulted in a 500 HTTP status with page content of "Error. An error occurred while processing your request." This means that (1) user agent is not a factor, and (2) BeautifulSoup did not mangle the URI when it scraped it. Unfortunately, I think these pages are lost.

ReplyDeleteboth sites now redirecting to: https://www.ahrq.gov/gam/index.html

ReplyDelete$ curl -IL http://guidelines.gov/

HTTP/1.1 301 Moved Permanently

Content-Length: 158

Content-Type: text/html; charset=UTF-8

Location: https://www.ahrq.gov/gam/index.html

Server: Microsoft-IIS/7.5

X-Powered-By: ASP.NET

Date: Tue, 17 Jul 2018 14:03:10 GMT

HTTP/1.1 200 OK

Content-Language: en

Content-Type: text/html; charset=utf-8

ETag: "1531825223-1"

Last-Modified: Tue, 17 Jul 2018 11:00:23 GMT

Link: ; rel="canonical",; rel="shortlink"

Server: Apache/2.4.27 (Red Hat) PHP/7.0.10

X-Content-Type-Options: nosniff

X-Drupal-Cache: HIT

X-Frame-Options: SAMEORIGIN

X-Powered-By: PHP/7.0.10

X-UA-Compatible: IE=edge,chrome=1

Cache-Control: public, max-age=3600

Expires: Tue, 17 Jul 2018 15:03:10 GMT

Date: Tue, 17 Jul 2018 14:03:10 GMT

Connection: keep-alive

Strict-Transport-Security: max-age=31536000

$ curl -IL https://qualitymeasures.ahrq.gov

HTTP/1.1 301 Moved Permanently

Server: AkamaiGHost

Content-Length: 0

Location: https://www.ahrq.gov/gam/index.html

Date: Tue, 17 Jul 2018 14:04:38 GMT

Connection: keep-alive

HTTP/1.1 200 OK

Content-Language: en

Content-Type: text/html; charset=utf-8

ETag: "1531825223-1"

Last-Modified: Tue, 17 Jul 2018 11:00:23 GMT

Link: ; rel="canonical",; rel="shortlink"

Server: Apache/2.4.27 (Red Hat) PHP/7.0.10

X-Content-Type-Options: nosniff

X-Drupal-Cache: HIT

X-Frame-Options: SAMEORIGIN

X-Powered-By: PHP/7.0.10

X-UA-Compatible: IE=edge,chrome=1

Cache-Control: public, max-age=3600

Expires: Tue, 17 Jul 2018 15:04:38 GMT

Date: Tue, 17 Jul 2018 14:04:38 GMT

Connection: keep-alive

Strict-Transport-Security: max-age=31536000