2011-04-13: Implementing Time Travel for the Web

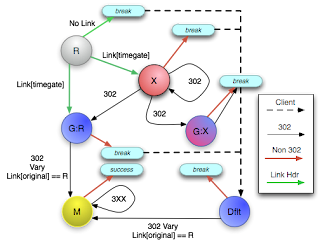

Recent trends in digital libraries point toward integration with the architecture of the World Wide Web. The award-winning Memento Project proposes extending HTTP to provide protocol-level access to mementos (archived previous states) of web resources. Using content negotiation and other protocol operations, rather than archive-specific methods, Memento gives the digital library and preservation community a standardized way to navigate between an original resource and its mementos.

*Memento Client State Chart*

The Old Dominion University (ODU) Web Sciences and Digital Libraries Research Group has partnered with the Los Alamos National Laboratory (LANL) Research Library to create Memento and to develop prototype Memento-compliant client and server implementations. A variety of Memento clients have been created, tested, and co-evolved along with the Memento protocol: there is now a Firefox extension, an Internet Explorer browser helper object, and a WebKit-based Android browser. The design and technical solutions identified during the d...
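To make the idea of datetime content negotiation concrete, here is a minimal Python sketch of the client side. It assumes the header name (`Accept-Datetime`) and link relation types (`original`, `timemap`, `memento`) of the Memento specification as later published in RFC 7089; the protocol drafts of 2011 may differ in detail. The TimeGate and archive URLs, the example `Link` header, and the helper names (`timegate_request`, `parse_link_header`, `mementos`, `closest_memento`) are hypothetical. The sketch builds a request without sending it, parses a `Link` header of the kind a Memento-compliant server might return, and picks the memento nearest the requested datetime.

```python
"""Sketch of client-side Memento datetime negotiation (hypothetical helpers).

Assumes RFC 7089 header and rel names; URLs and the Link header are made up.
No network I/O is performed.
"""
import re
import urllib.request
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime

# Each Link entry: "<uri>" followed by its ";"-separated parameters.
_LINK_RE = re.compile(r"<([^>]*)>([^<]*)")
# A parameter is name="quoted value" or name=token.
_PARAM_RE = re.compile(r';\s*([\w-]+)\s*=\s*(?:"([^"]*)"|([^;,\s]+))')


def timegate_request(timegate_url, when):
    """Build (but do not send) a request for the resource state near `when`."""
    return urllib.request.Request(
        timegate_url,
        # HTTP-date in GMT, e.g. "Wed, 13 Apr 2011 00:00:00 GMT"
        headers={"Accept-Datetime": format_datetime(when, usegmt=True)},
    )


def parse_link_header(value):
    """Return (uri, params) pairs from an HTTP Link header value."""
    links = []
    for uri, raw_params in _LINK_RE.findall(value):
        params = {}
        for name, quoted, token in _PARAM_RE.findall(raw_params):
            params[name.lower()] = quoted if quoted else token
        links.append((uri, params))
    return links


def mementos(links):
    """Keep entries whose rel includes "memento"; return (uri, datetime) pairs."""
    result = []
    for uri, params in links:
        # rel may hold several space-separated types, e.g. "first memento"
        if "memento" in params.get("rel", "").split() and "datetime" in params:
            result.append((uri, parsedate_to_datetime(params["datetime"])))
    return result


def closest_memento(candidates, when):
    """One possible selection policy: the memento nearest in time to `when`."""
    return min(candidates, key=lambda item: abs(item[1] - when))


# Hypothetical Link header listing an original resource, a TimeMap, and two mementos.
EXAMPLE_LINK = (
    '<http://example.org/page>; rel="original", '
    '<http://archive.example.net/timemap/http://example.org/page>; rel="timemap", '
    '<http://archive.example.net/20010911023911/http://example.org/page>; '
    'rel="first memento"; datetime="Tue, 11 Sep 2001 02:39:11 GMT", '
    '<http://archive.example.net/20110401120000/http://example.org/page>; '
    'rel="last memento"; datetime="Fri, 01 Apr 2011 12:00:00 GMT"'
)

if __name__ == "__main__":
    target = datetime(2011, 4, 13, tzinfo=timezone.utc)
    req = timegate_request(
        "http://archive.example.net/timegate/http://example.org/page", target
    )
    # urllib stores header names via str.capitalize(), hence "Accept-datetime"
    print(req.get_header("Accept-datetime"))
    found = mementos(parse_link_header(EXAMPLE_LINK))
    print(closest_memento(found, target)[0])
```

In Memento itself the choice of memento is normally made by the TimeGate on the server side, which responds to the `Accept-Datetime` request; `closest_memento` only illustrates that selection step locally. The regex-based parser is deliberately small: it avoids splitting on commas because `datetime` values such as `"Tue, 11 Sep 2001 ..."` contain commas themselves.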